How to Build a GenAI Application with EDB Postgres AI Factory: A Step-by-Step Guide

Imagine having a virtual assistant that could securely supercharge your enterprise’s productivity, empowering your customers, partners, and employees with instant access to critical information. Now, picture being able to build and deploy such an assistant within minutes, using simple point-and-click interfaces, all while maintaining complete control over your data and AI assets.

In this tutorial, we'll take you on a journey through EDB Postgres AI Factory, showing you how to transform this vision into reality. We'll demonstrate how to build a secure, powerful virtual assistant that keeps your data sovereignty intact while delivering exceptional value to your organization.

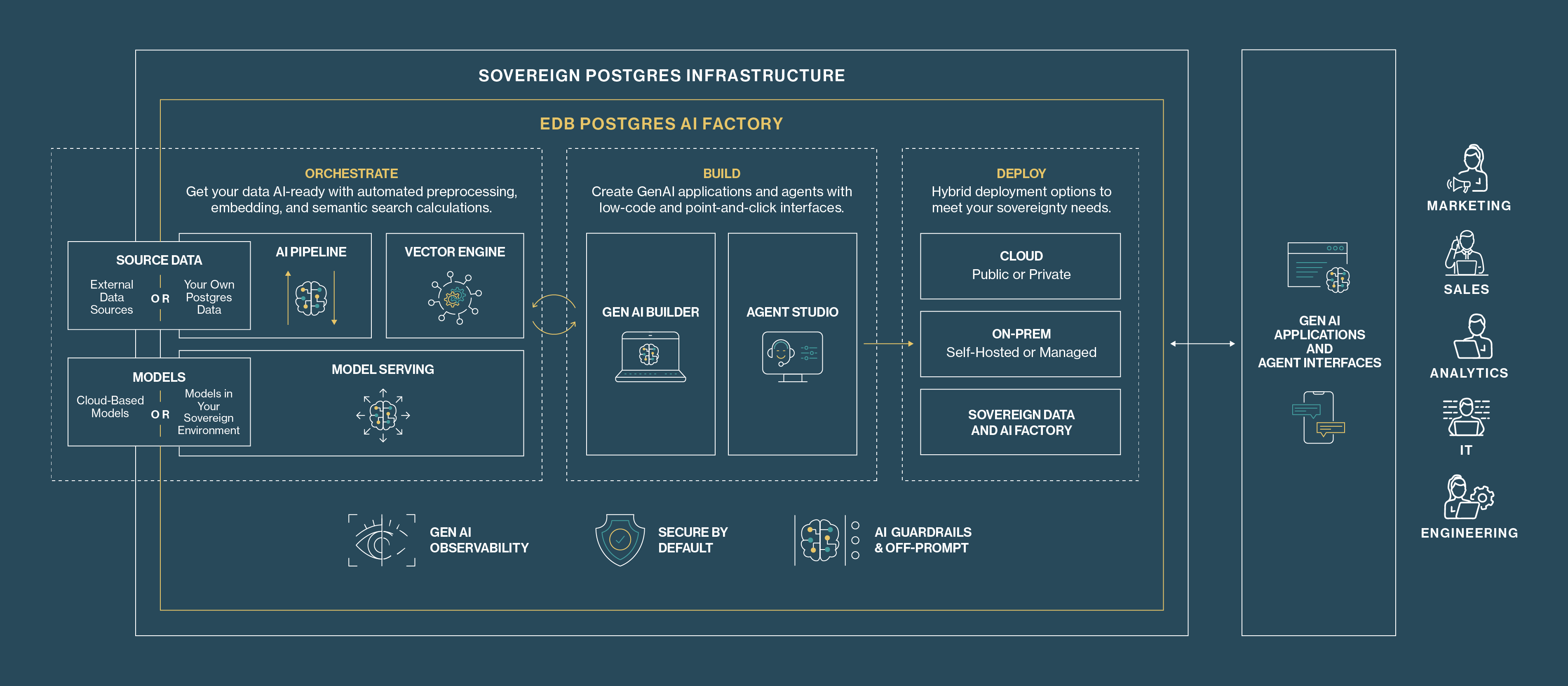

AI Factory Overview

EDB Postgres AI Factory is the fastest way to securely build, test, and launch sovereign AI applications. It changes the process of building GenAI applications from the foundation up. Our unified GenAI inferencing and agentic platform eliminates the complexity that's been holding your AI initiatives back, enabling you to deploy production-ready applications in weeks instead of months while maintaining complete data sovereignty and governance.

AI Factory combines five integrated components that work seamlessly within your existing Postgres environment to provide comprehensive AI capabilities for all your GenAI applications.

- GenAI Builder is a platform that lets teams create AI solutions using simple interfaces. It enables development of secure GenAI applications through a point-and-click interface for business users and a Python SDK for developers to build complex integrations and workflows for nearly any task you can imagine — and some you haven't thought of yet!

- Agent Studio capabilities make it possible to build AI agents tailored to your requirements that can work independently to complete tasks — with the same flexible GenAI Builder interface options.

- AI Pipeline simplifies AI Knowledge Base creation and management. It automatically keeps your data synchronized and embeddings up to date with minimal code.

- Vector Engine securely stores AI and business data in Postgres. It provides fast semantic search capabilities without sending data to external services.

- Model Serving offers on-premises AI model deployment with flexible scaling. It allows easy model switching while optimizing hardware usage for maximum efficiency.

In this tutorial, we'll explore several of these components to guide you through creating an end-to-end GenAI application — a virtual assistant that provides conversational interfaces to business knowledge. Let's begin by setting the stage.

Use Case

ACME Bank is planning to deploy a virtual assistant powered by AI Factory to give its front-line employees instant access to customer insights and intelligence.

Consider Mike, an account executive at ACME, who will use the AI assistant daily to analyze customer data, identify upsell opportunities, review product feedback, and craft personalized outreach emails that resonate with clients.

ACME Bank values data and AI sovereignty and security, so it plans to deploy AI Factory close to its source data systems in an on-prem data center. AI Factory will primarily use two data sources to feed the virtual assistant:

- Product reviews left by customers about ACME products stored in a Postgres table.

- Object store that keeps internal product catalogs, regulations, memos, etc.

The following sections will outline the step-by-step process of using the AI Factory to build the virtual assistant for ACME Bank.

Step 1: Creating Knowledge Bases with AI Pipeline

AI systems rely on high-quality data inputs. Better data quality and AI optimization lead to more accurate responses from chat models. For this reason, we will use AI Pipeline and the GenAI Builder to prepare the source data and transform it into usable Knowledge bases.

A Knowledge base in the AI Factory serves as the chat assistant's source of truth. When data enters the knowledge base, it's automatically vectorized and ready to be accessed by chat assistants. This vectorization process converts text into numerical representations that capture semantic meaning, enabling fast and efficient similarity searches. As a result, the chat assistant can quickly find and retrieve the most relevant information when responding to user queries.
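To make the idea of similarity search concrete, here is a minimal sketch. It assumes the pgvector extension (its `vector` type and `<=>` cosine distance operator) is available in your database; the tutorial itself does not show this, and the three-dimensional vectors and labels below are made up for illustration. Real embedding models produce vectors with hundreds of dimensions.

```sql
-- Assumption: pgvector is installed and can be enabled in this database.
CREATE EXTENSION IF NOT EXISTS vector;

-- Toy "embeddings" invented for this sketch; they do not come from a real model.
WITH docs (label, embedding) AS (
    VALUES
        ('cashback on groceries', '[0.9, 0.1, 0.0]'::vector),
        ('airport lounge access', '[0.1, 0.9, 0.1]'::vector),
        ('annual fee waiver',     '[0.2, 0.3, 0.9]'::vector)
)
SELECT label,
       -- <=> is pgvector's cosine distance: smaller means more similar.
       embedding <=> '[0.8, 0.2, 0.1]'::vector AS cosine_distance
FROM docs
ORDER BY cosine_distance
LIMIT 2;
```

The query vector points in nearly the same direction as the first row, so 'cashback on groceries' should rank first. A Knowledge base applies the same principle to embeddings generated from your actual text.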

AI Factory offers two methods for creating Knowledge bases. You can use the GenAI Builder's visual point-and-click interface, or you can create them declaratively with AI Pipelines using familiar Postgres SQL. Both approaches are equally effective, and we'll explore both to demonstrate their capabilities.

First, let’s use the AI Pipeline to create a Knowledge base out of the customer_reviews table, which stores the product feedback left by customers. The table has the following schema:

    CREATE TABLE customer_reviews (
        review_id SERIAL PRIMARY KEY,
        customer_name VARCHAR(100) NOT NULL,
        customer_email VARCHAR(100),
        date date,
        review TEXT NOT NULL
    );

A few records from the table look like this:

    INSERT INTO customer_reviews (customer_name, customer_email, date, review) VALUES
    ('Julia Scott', 'julia.scott@example.com', '2025-01-24 06:52:44', 'I love my ACME Rewards Card! The cashback on groceries and dining really adds up. Redeeming points is super easy.'),
    ('Bob Smith', 'bob.smith@example.com', '2025-01-09 06:52:44', 'The ACME Elite Card is totally worth the annual fee. Free lounge access saved me during a long layover.');

We will write the following SQL query to create our first Knowledge base out of it.

    SELECT aidb.create_table_knowledge_base(
        name => 'acme_reviews_kb',
        model_name => 't5',
        source_table => 'customer_reviews',
        source_data_column => 'review',
        source_data_type => 'Text',
        source_key_column => 'review_id',
        distance_operator => 'Cosine'
    );

    SELECT aidb.bulk_embedding('acme_reviews_kb');

    SELECT aidb.set_auto_knowledge_base('acme_reviews_kb', 'Live');

The aidb.bulk_embedding() operation starts the initial vectorization of the data that already exists in the customer_reviews table. The aidb.set_auto_knowledge_base() operation enables auto-vectorization for any future changes to the source table.

We also used the t5 embedding model, which is one of the embedding models built into the AI Pipeline runtime. Using a built-in model makes embedding generation more efficient and keeps data from being sent to external models — ensuring data sovereignty and security.
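Once the Knowledge base exists, you can sanity-check it from SQL. The sketch below assumes your installed aidb version provides `aidb.retrieve_text(knowledge_base_name, query_text, number_of_results)`; verify the exact name and signature against your version's documentation before relying on it. The inserted row is made up for illustration.

```sql
-- With auto-processing set to 'Live', a newly inserted row should be
-- embedded without another manual aidb.bulk_embedding() run.
INSERT INTO customer_reviews (customer_name, customer_email, date, review)
VALUES ('Test User', 'test.user@example.com', '2025-02-01',
        'Cashback posted quickly on my ACME Rewards Card.');  -- hypothetical row

-- Assumed signature: (knowledge base name, query text, number of results).
-- Asks for the three reviews closest in meaning to the query text.
SELECT *
FROM aidb.retrieve_text('acme_reviews_kb', 'cashback on everyday purchases', 3);
```

If Live mode is working, the new review should be eligible to appear in the results alongside the rows embedded by the bulk run.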

Step 2: Creating Knowledge Bases with GenAI Builder

If you're a data professional who prefers working with declarative languages like SQL, AI Pipeline provides a familiar Postgres environment for creating Knowledge bases. For those who prefer a visual approach, the GenAI Builder offers a point-and-click interface to create and populate Knowledge bases from any structured, semi-structured, or unstructured data source.

Next, let's create another Knowledge base using the GenAI Builder to store vector embeddings of ACME's product catalog — which contains internal information about ACME credit card products.

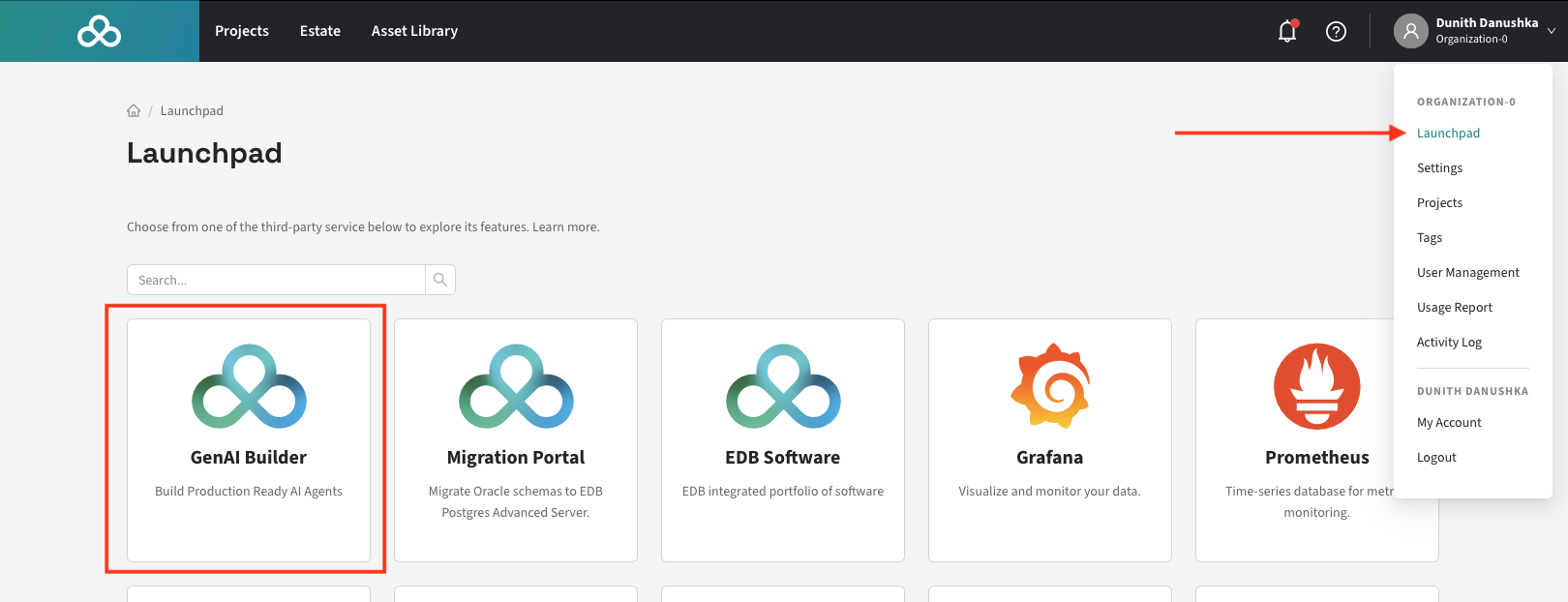

To access the GenAI Builder, click the Launchpad link under your profile in the EDB Postgres AI Console. Then select the GenAI Builder icon on the left.

Once you land on the GenAI Builder, you can start the process by creating a Data Source and uploading files into it. Go to Data Lake > default and create a new folder named “product-catalog”. Upload this PDF file, a fictitious product catalog crafted for ACME Bank, into that folder.

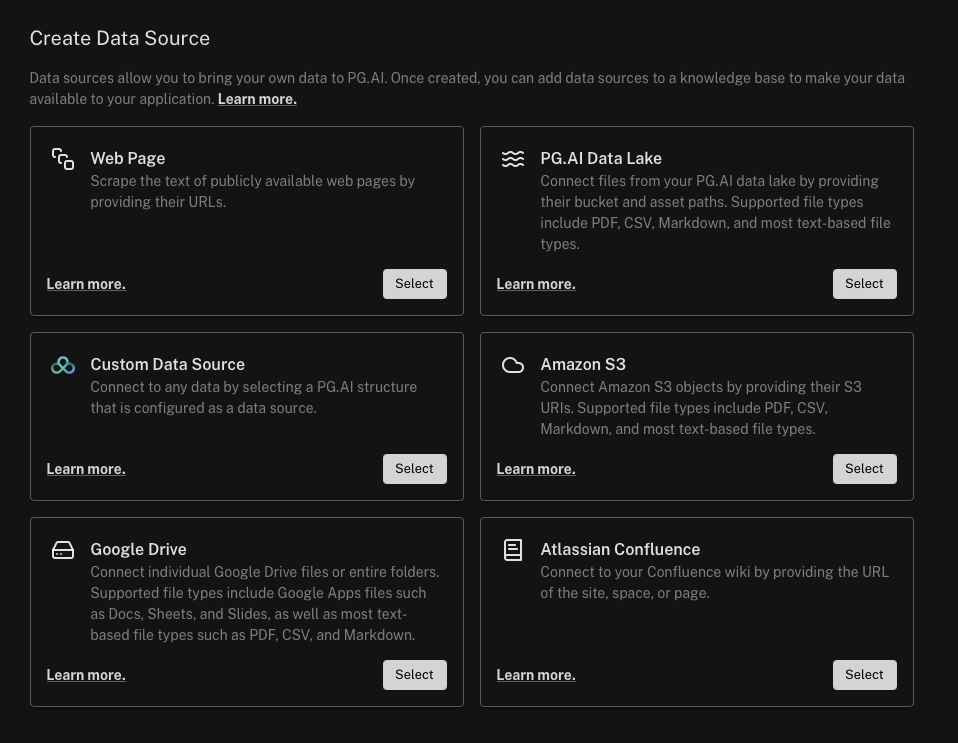

Next, let’s create a new data source out of this folder. Go to Libraries > Data Sources and click “Create Data Source” in the top right corner. Choose “PG.AI Data Lake”.

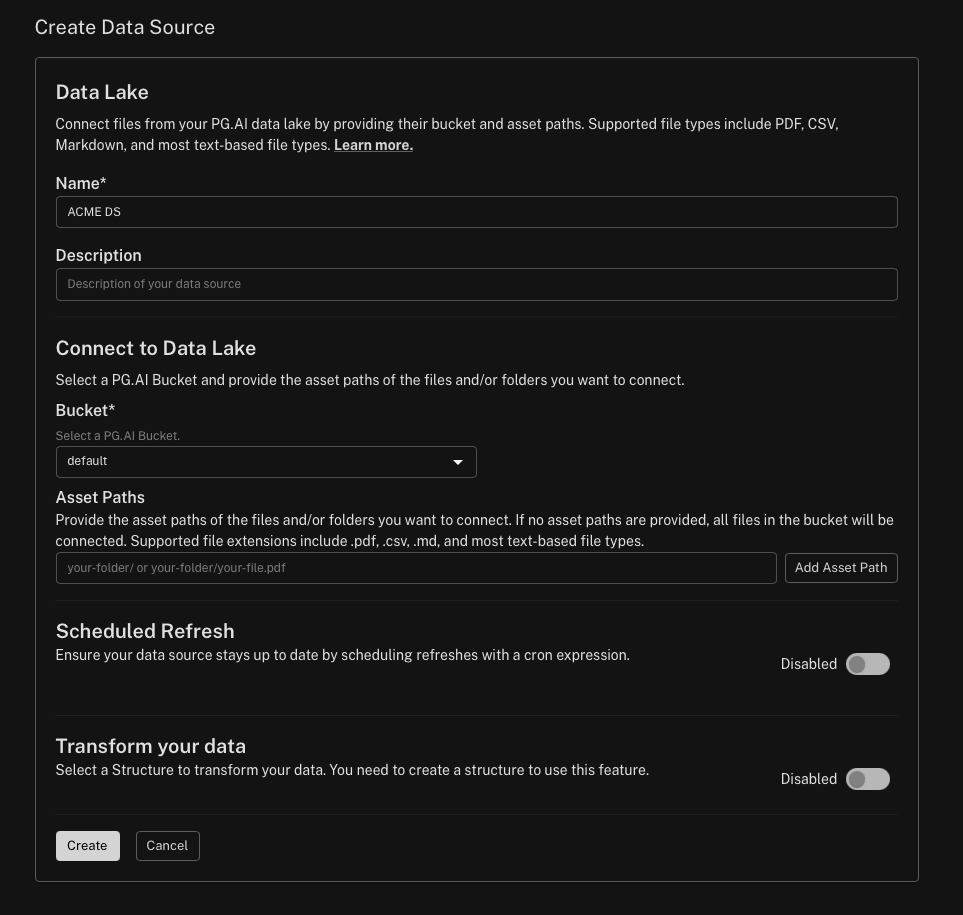

Give your data source an appropriate name. Choose default as the bucket name and leave the other settings as they are.

When done, click “Create” and the PDF file will be fetched from the bucket and processed so that vector embeddings are available for semantic search.

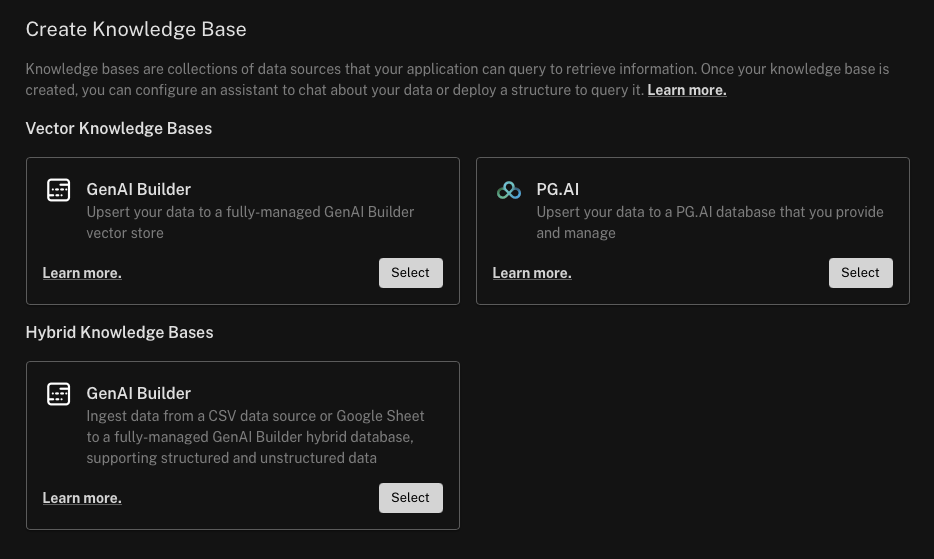

Next, let’s create a Knowledge base out of this data source. Navigate to the “Libraries > Knowledge Bases” screen and click “Create Knowledge Base”. Choose Vector Knowledge Bases > GenAI Builder to create a fully-managed GenAI Builder vector store.

Give your Knowledge base a name and choose to populate it from the data source that we just created from the catalog PDF file in the data lake.

If you check the Knowledge bases list, you will see that the Knowledge base we created earlier with AI Pipeline is listed there alongside the one we just created. This demonstrates the seamless integration between different AI Factory components, giving you the flexibility to choose the best tool for the job based on your preferences and requirements.

Step 3: Creating the Assistant

Now that we have our data sources and Knowledge bases prepared, let’s go ahead and create an Assistant to wire things together. An Assistant is an entity that can converse with humans while utilizing an LLM, Knowledge bases, and Tools (which we will discuss later) to achieve its goals. With GenAI Builder’s point-and-click interface, you can build and deploy a fully functioning assistant in just minutes.

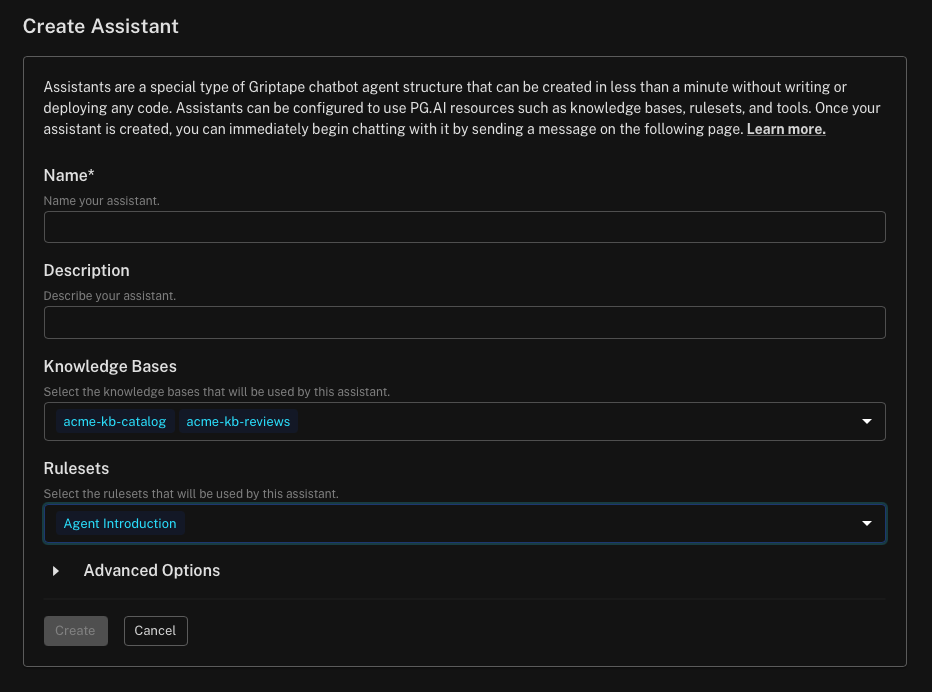

In the left sidebar, click on the Assistants menu, then click Create Assistant to open the creation dialog. An Assistant requires several key parameters, with the most important being:

- Knowledge base(s) - One or more Knowledge bases containing vector embeddings. The Assistant searches these Knowledge bases first when formulating responses.

- Ruleset - A set of natural language rules that govern the Assistant's behavior, similar to a system prompt given to an LLM. For example, you can create a rule instructing the Assistant to introduce itself at the start of each conversation.

As shown below, create an Assistant by selecting the two Knowledge bases we created earlier.

Step 4: Testing the Assistant

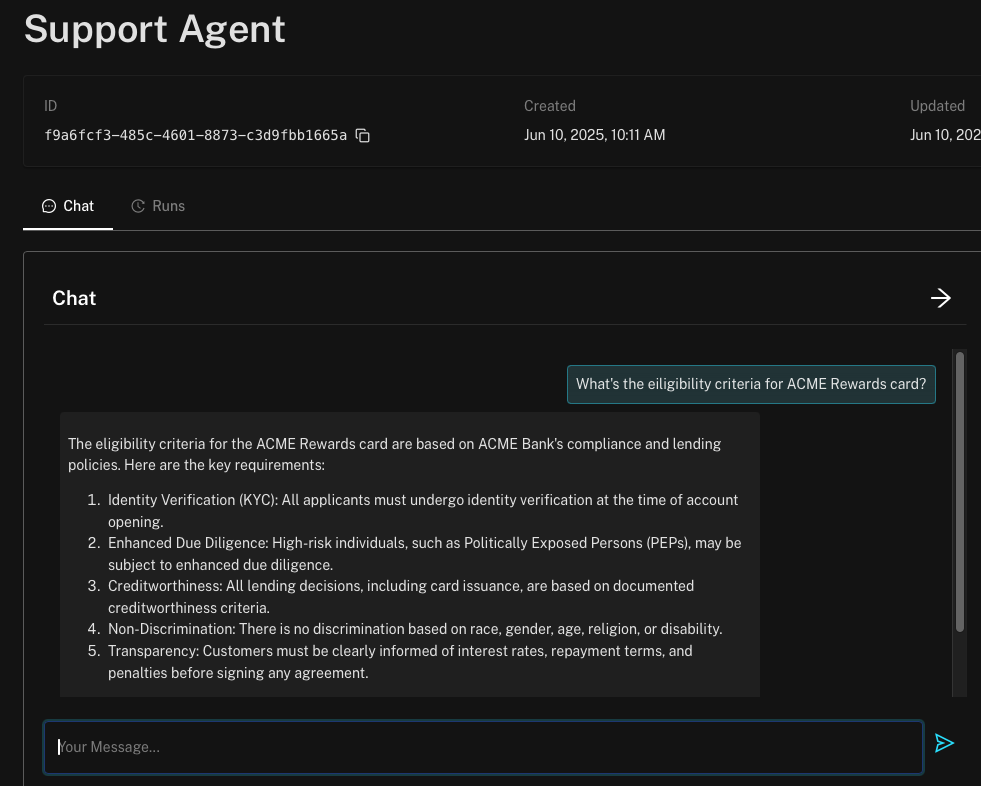

You can test the Assistant immediately after its creation. Let’s take a practical example for verification.

The ACME product catalog is a lengthy document containing various product definitions, rules, and regulations. Imagine that Mike, an Account Executive at ACME Bank, needs to quickly find the eligibility criteria for the ACME Rewards credit card. He asks the Assistant: "What are the eligibility criteria for the ACME Rewards credit card?"

The Assistant responds with something similar to this:

This response draws from the product catalog PDF file we uploaded earlier.

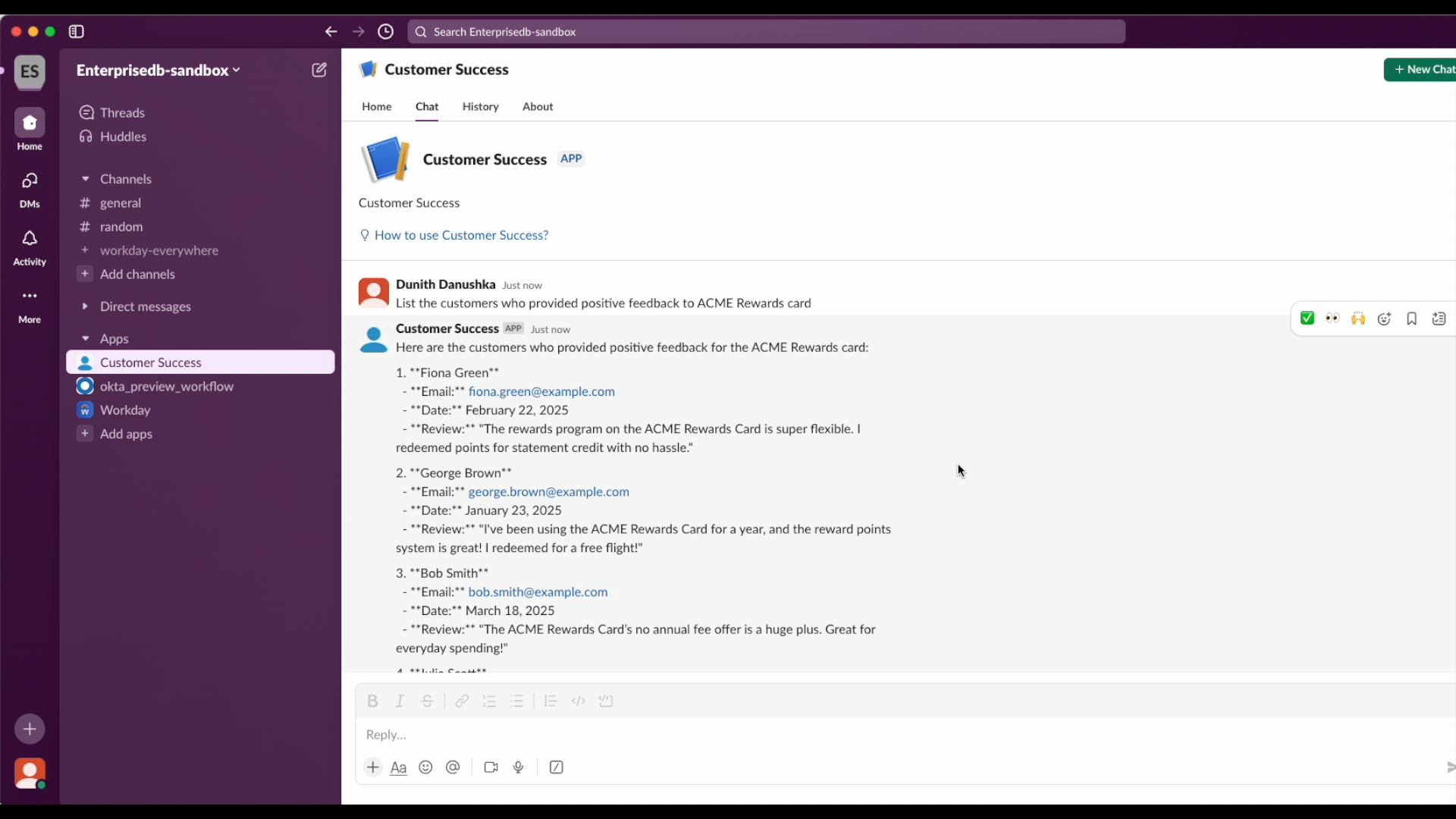

Mike can also retrieve a list of customers who have expressed positive sentiment about the ACME Rewards card by asking: "List the customers who liked the ACME Rewards card." The Assistant extracts this information from the customer reviews.

As you can see, the Assistant effectively combines information from both Knowledge bases we created.
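For intuition, the retrieval half of that second question can be approximated directly in SQL. This is a sketch, not how the Assistant is implemented: it assumes `aidb.retrieve_key(knowledge_base_name, query_text, number_of_results)` exists in your aidb version and returns a text `key` column (the `review_id` here) plus a `distance` column. Check both assumptions against your installation.

```sql
-- Assumed return columns: key (text, holds review_id) and distance.
SELECT r.customer_name,
       r.review,
       k.distance
FROM aidb.retrieve_key('acme_reviews_kb', 'happy with the ACME Rewards card', 5) AS k
JOIN customer_reviews AS r
  ON r.review_id::text = k.key   -- map each hit back to its source row
ORDER BY k.distance;             -- closest matches first
```

Semantic closeness is not the same as sentiment, so a query like this only narrows the candidates. Judging which reviews are actually positive is the part the Assistant's LLM contributes.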

Step 5: Configuring LLMs

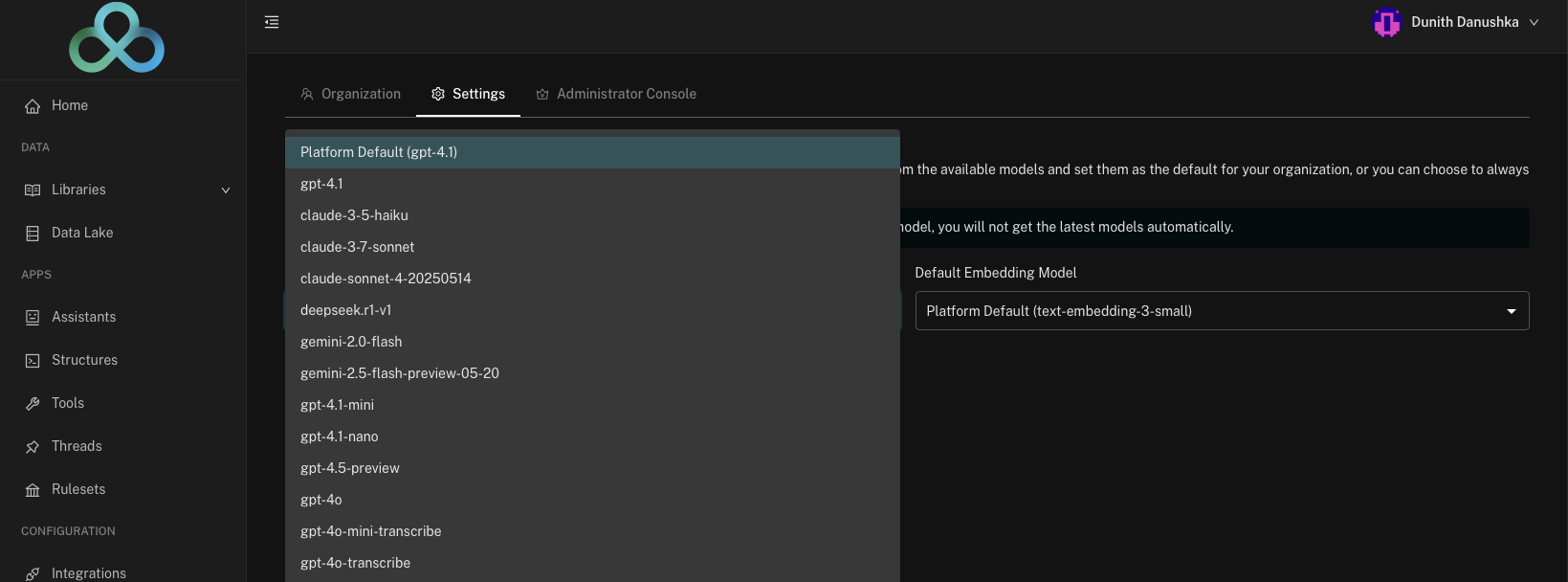

AI Factory allows you to choose from a wide selection of LLMs from providers including OpenAI, Google, NVIDIA, and many more, giving you the flexibility to choose the best model to build your chat assistant. This includes chat, embedding, and reranking models with different parameters. More capable models generally produce better responses, so it is worth matching the model to the quality your use case demands.

Step 6: Integrating Assistant with Enterprise Applications

The final step is integrating the assistant with enterprise applications to make it accessible to frontline business users across departments for their daily work.

AI Factory enables AI assistants to be embedded in enterprise applications like Slack, allowing users to discover business insights and make predictions through natural conversations without leaving their preferred tools. Currently, AI Factory supports exporting assistants as Slack applications.

To set up Slack integration, navigate to the Integrations menu in the sidebar and click Create Integration. This will launch the configuration process to generate a Slack application that can be installed on your business Slack workspace.

Wrapping It Up

The assistant's capabilities can be further enhanced by connecting it to Tools. For example, Mike could use a Tool that directly queries the CRM system to identify customers eligible for credit card upgrades based on their spending patterns and credit history. When Mike asks "Which of my customers are good candidates for upgrading to the ACME Platinum card?", the assistant can analyze customer data in real-time and provide actionable insights.
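As a rough illustration of the kind of query such a Tool might run, consider the sketch below. Everything in it is hypothetical: the `crm_customers` and `card_transactions` tables, their columns, and the thresholds are invented for this example and do not come from the tutorial or from any real CRM.

```sql
-- Hypothetical CRM tables, invented for this sketch.
CREATE TEMP TABLE crm_customers (
    customer_id       int PRIMARY KEY,
    customer_name     text NOT NULL,
    account_executive text NOT NULL,
    current_card      text NOT NULL,
    credit_score      int  NOT NULL
);

CREATE TEMP TABLE card_transactions (
    customer_id int REFERENCES crm_customers (customer_id),
    posted_on   date NOT NULL,
    amount      numeric(12, 2) NOT NULL
);

-- Mike's customers who are not yet on Platinum, filtered by
-- illustrative credit-score and 12-month spend thresholds.
SELECT c.customer_name,
       c.credit_score,
       sum(t.amount) AS spend_last_12_months
FROM crm_customers AS c
JOIN card_transactions AS t USING (customer_id)
WHERE c.account_executive = 'Mike'
  AND c.current_card <> 'ACME Platinum'
  AND c.credit_score >= 720                               -- illustrative threshold
  AND t.posted_on >= current_date - interval '12 months'
GROUP BY c.customer_id, c.customer_name, c.credit_score
HAVING sum(t.amount) >= 25000                             -- illustrative threshold
ORDER BY spend_last_12_months DESC;
```

A Tool wrapping a query like this would return structured rows, which the assistant could then summarize in natural language for Mike.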

AI Factory significantly reduces the time and effort required to build production-ready GenAI applications. As demonstrated in this tutorial, you can create a fully functional AI assistant with access to enterprise knowledge bases in just minutes, not months. This accelerates time to market and delivers faster business value.

Most importantly, AI Factory achieves all this while maintaining complete control over your data and AI assets. Your sensitive business information never leaves your secure environment, and you retain full sovereignty over your AI models and knowledge bases.

To learn more about how AI Factory can transform your organization's AI capabilities, read our comprehensive launch blog here, or review the documentation.