KEY POINTS

AI in radiology promises efficiency but struggles with validation and practical implementation.

Diverse datasets and government action are crucial for developing unbiased AI models, according to Ashoka University's Suvrankar Datta.

Current AI models often double radiologists' workload because every flagged image must be rechecked.

Datta remains optimistic about AI's long-term potential to enhance radiology workflows and patient care.

"The promise of AI in radiology is significant and time-saving, but the current reality? We often find ourselves spending double the time just validating its findings."

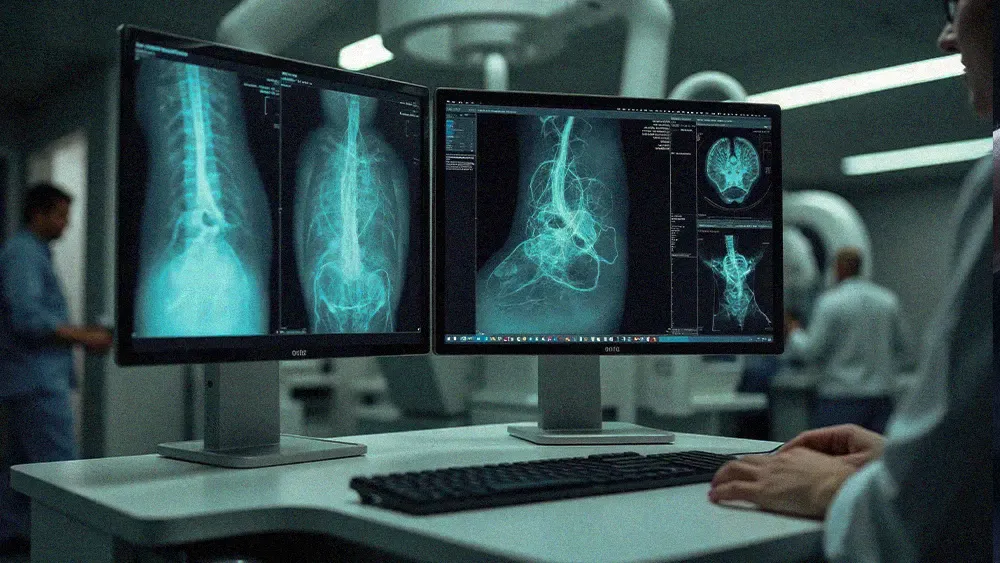

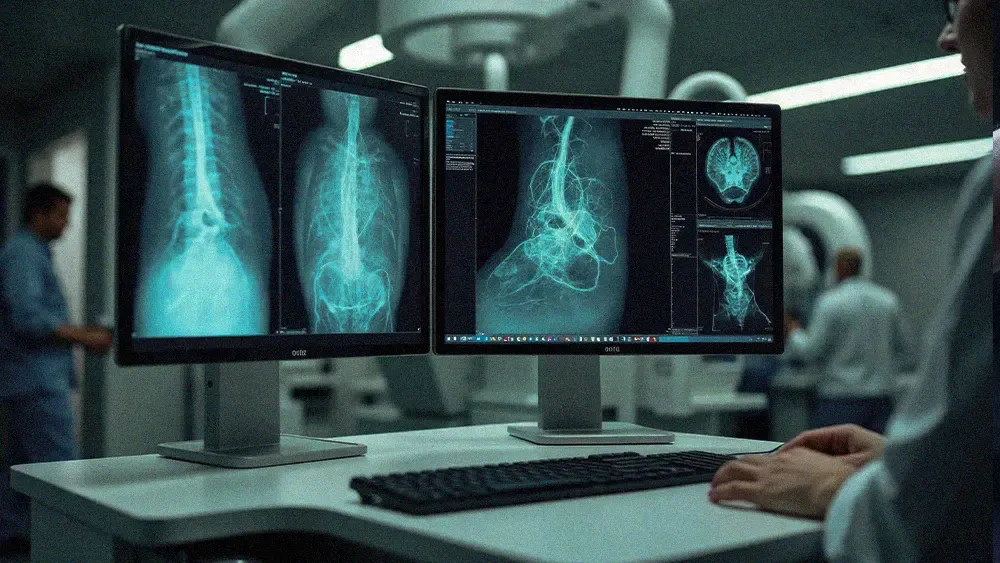

Radiology’s AI revolution looks inevitable on paper: faster diagnoses, sharper insights, streamlined workflows. On the ground, however, that vision often collides with the hard reality of validating the machines, a task proving more demanding than many anticipated.

Suvrankar Datta, a radiologist, AI researcher, and Faculty Fellow at Ashoka University, works at the crossroads of medicine and machine learning. His take: the future is coming, but it’s messier, slower, and far more human than the hype suggests.

The double work dilemma: "The promise of AI in radiology is significant and time-saving, but the current reality? We often find ourselves spending double the time just validating its findings," says Datta. "We're still waiting for robust evidence that these tools reliably reduce workload or deliver clear ROI."

Datta sees a widening gap between innovation and implementation. "There are a lot of amazing startups building in the vision domain, but they're not making it into clinical practice," he says. In India, that disconnect stems largely from fragmented workflows. Rather than lightening the load, most AI models force radiologists to recheck every flagged image, doubling the work instead of cutting it.

No patient left behind: "We misunderstand what AI can and cannot do," says Datta. "You cannot take a vanilla AI model and use it in your own practice without changing the thresholds." A model built for screening, for instance, is tuned to flag a broader range of potential abnormalities than one built for definitive diagnosis. In practice, however, this shift in context isn’t always accounted for.

It's a disconnect that breeds doubt. "We haven’t reached the level of AI accuracy yet where we can say with a 100% guarantee that it hasn’t missed anything," he adds. "And that’s why people aren’t using it much, because you can’t let a single patient down." Even when AI correctly flags abnormal findings, scans it deems "normal" often still need a second look.


Hurdle after hurdle: "Without good data infrastructure that spans broad communities and regions, it’s very difficult to build unbiased AI models," Datta says. Current datasets skew heavily toward a few U.S. regions, leaving many populations underrepresented. "We need diverse data. That’s not happening yet. Government needs to be proactive," says Datta.

But even with better data, adoption isn’t guaranteed. "The bigger hurdles are cultural and financial," he adds. For smaller practices, there’s little business case to justify the cost of AI tools on top of existing radiologist salaries—especially when time savings remain unproven. "Once more evidence emerges, adoption will follow."

Early wins: Though progress on the larger fronts faces obstacles, AI already delivers some benefits. "The biggest benefit of AI currently is in the drafting of preliminary reports," Datta says. "Instead of starting from scratch, we begin with a preliminary report, review the scan, and make changes. That’s been the biggest timesaver so far."

Innovating for impact: Datta's research pushes the boundaries of what AI can responsibly achieve in radiology. He's exploring hyper-personalization of reports to match individual radiologists' styles; investigating and mitigating racial, gender, and community-based biases in AI models; and developing leaderboards that evaluate AI model accuracy for specific use cases. Another key area is "creating reliable and affordable agentic workflows," with a focus on smaller, computationally efficient models that can run on standard PCs at low cost.

Bullish long-term: Datta anticipates the biggest impact over the next six to 12 months will come from "optimizing workflows, decreasing administrative work, and creating patient-centric reports in the patient's own language using LLMs." He hopes such advances will bring radiologists closer to their patients.

Despite ongoing obstacles, Datta says he is "extremely bullish about the capabilities of AI in the long run." As he puts it, "They are only increasing." He envisions a future where, once accuracy and reliability are achieved, radiologists will spend more time on "cognitively useful things for patients" and "actually be able to extract all possible insights."