Code challenge!

The setup: You are connected to your database via psql. You have just figured out a SQL query that will answer this question "What is the first name and two-letter country code for every user whose last name begins with the character 'R', sorted by first name and then country code?" Here is that SQL statement:

select u.first_name,

a.country_abbrev

from users as u

join addresses as a on u.address_id = a.id

where u.last_name like 'R%'

order by u.first_name,

a.country_abbrev;The challenge: From your application language of choice, find the most streamlined way to deserialize the results of this query into a form that can be sent to a front end. (The front end might be HTML, JSON, or Protobuf, so for now just pick a representation that could trivially be further transformed into one of those.)

I'm going to use Go, because that's what I'm most familiar with.

First, I'm going to assign the SQL query to a multiline string literal.

query := `

select u.first_name,

a.country_abbrev

from users as u

join addresses as a on u.address_id = a.id

where u.last_name like 'R%'

order by u.first_name,

a.country_abbrev

`Already, my choice is controversial. For the past quarter century, developers have been inundated with messages that using an ORM is a "best practice". Or at least a query builder. No raw SQL!

However, 1) the coding challenge is to go as lightweight as possible, and 2) we've already figured out how to do this in SQL, and we have deadlines, so why would we make this more difficult than necessary?

Now I need to connect to Postgres from my code, which first means I need a library. In the Go ecosystem, that would be pgx. (pgx's only one-time competitor, pq says to use pgx, so the choice is simple.)

Having chosen a library, I need to connect to Postgres.

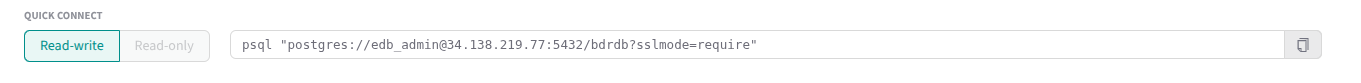

In EDBPGAI's Hybrid Control Plane, you'll see that we list psql connection commands using a connection URI:

pgx can use such URIs directly. Here's how I connect to my local postgres instance on my laptop:

pool, err := pgxpool.New(

ctx, "postgresql://postgres:postgres@localhost:5432/postgres")

if err != nil {

log.Fatalf("Unable to establish connection: %v", err)

}Because it takes the same amount of code to create a pool as it does a single connection, I have opted for the pool. In a production environment would we hard-code the URI? Definitely not. We would use a secure method of passing in the URI. In a production environment, would we log an error and quit on a connection error? Likely not, but it's good enough for illustrative purposes.

I need to deserialize the results of my query. Each row has a first name and a two letter country code, so let's just make a struct to hold those:

type NameState struct {

FirstName string

State string

}A list of these structs can hold our query results.

Again, I am courting controversy! Shouldn’t I be using an ORM to map this result set to the inevitable full-fledged User or Address objects I have in my application? An obvious difficulty is determining how a result set that draws equally on users and addresses — and uses only some of the attributes of each, rather than returning complete users or addresses — can be represented with either a User object or an Address object. While ORMs can be useful in some scenarios, they aren’t the most streamlined solution in scenarios like the one I’m illustrating here. It’s easier just to use a struct specifically for this result set.

Let’s dig in a little more here.

If we are trying to streamline our code, we need a new perspective, and it is this: Postgres is not an object store, it is an answer engine.

Another way to put it: Postgres is a service whose API accepts arbitrary SQL queries and returns result sets.

In fact, there are many times in my job that I just use this “PostgreSQL service” directly via psql. This is great for “one-off questions”, where the only code I need to write is SQL! I get my answer and I move on with my day.

Sometimes there are questions that get asked repeatedly, and whose answers need to be handed off for further processing (such as to a front end for prettier formatting). I formalize these questions into microservice endpoints.

For those of us writing gRPC microservices, Postgres’s question-and-answer service paradigm fits well with gRPC’s request-protobuf-message-and-response-protobuf-message service paradigm.

Let’s come back to my NameState struct. The above thinking is why, instead of using more generic user and address objects, I’m just deserializing to a struct that is purpose-built to answer just this question.

(Side note: In the microservices I have written, I actually deserialize straight into protobuf response messages — which may in turn be embedded in a final wrapper protobuf response message — with no intermediary types. I’m trying to keep this example as simple as possible, though, so my NameState struct is simplest.)

Here's how we run our query:

rowFetcher, err := pool.Query(ctx, query)

if err != nil {

log.Fatalf("Unable to run SQL query: %v", err)

}And here's how we deserialize our result set from our rowFetcher:

nameStates, err := pgx.CollectRows(rowFetcher, pgx.RowToStructByPos[NameState])

if err != nil {

log.Fatalf("Unable to collect rows: %v", err)

}You'll notice that the above code does not check a connection out of a connection pool, nor return it. It's still happening, but modern libraries like pgx take care of all of that for us — for both the happy path, and the sad path when errors happen. We keep our goal of streamlined code.

Likewise, you won't see any hand-written deserialization code. Here, we use pgx.RowToStructByPos, which uses column position and struct field order to deserialize the query results for us. (pgx has a rich set of deserialization functions, where you can deserialize by matching SQL result set column names to struct field names. But again, I’m keeping it simple here.) The bottom line is, we no longer need extra library bloat for deserialization. We keep our goal of streamlined code.

Here is the entire code of my Go program with three lines of added code at the end, where I loop through the query results and print them for the user:

package main

import (

"context"

"fmt"

"log"

"github.com/jackc/pgx/v5"

"github.com/jackc/pgx/v5/pgxpool"

)

type NameState struct {

FirstName string

State string

}

func main() {

query := `

select u.first_name,

a.state

from users as u

join addresses as a on u.address_id = a.id

where u.last_name like 'R%'

order by u.first_name,

a.state;

`

ctx := context.TODO()

pool, err := pgxpool.New(

ctx, "postgresql://postgres:postgres@localhost:5432/postgres")

if err != nil {

log.Fatalf("Unable to establish connection: %v", err)

}

rowFetcher, err := pool.Query(ctx, query)

if err != nil {

log.Fatalf("Unable to run SQL query: %v", err)

}

nameStates, err := pgx.CollectRows(

rowFetcher, pgx.RowToStructByPos[NameState])

if err != nil {

log.Fatalf("Unable to collect rows: %v", err)

}

for _, nameState := range nameStates {

fmt.Printf("Name: %s, State: %s\n",

nameState.FirstName, nameState.State)

}

}If you use a higher-level language like Python or Ruby, you might be chuckling and saying "Scripting languages have been able to do this since forever. I will crush this coding challenge!"

One thing that surprised me when I worked on a large Ruby codebase was that Active Record (a sort of ORM-ish library) was used, instead of doing the Ruby equivalent of the above. It seems that just because something can be done the more streamlined way, doesn't guarantee that it is done the more streamlined way.

I've always wondered if Java's initial complexity around database interactions lead to complex, heavyweight libraries (such as ORMs and query builders) proliferating not just in Java's ecosystem, but in all programming language ecosystems.

One of my favorite things about the rise of Go is that a lot of the advice in that community seems to be "just use SQL". And having a major player do that seems to give developers permission to "just use SQL" in other languages --- permission they might not have had before, due to long-followed, unquestioned "best practices".

It's also interesting to look back at history and see predictions that didn't pan out. I remember the prediction that all web sites/services would end up being written in super powerful high level scripting languages, because those languages made developers so much more productive. It turns out that never exactly came to pass, because performance continued to matter too much. (Web scale!) But what did happen was lower-level, more performant languages copied features from higher-level languages that make the above streamlined code possible in a language like Go.

So what are those features that more performant languages copied from scripting languages?

- Multiline string literals. Even Java got this feature with version 13 in 2019! Multiline string literals make it easy to paste SQL into your application code: SQL that you got working in a

psqlsession. (Pro tip: don’t forget to format your SQL so the next maintainer can read it! With great power comes great responsibility.) - Enough reflection/magic to make automated deserialization possible. Not only do I remember doing column-by-column deserialization in my early Java code, I was doing an easier version of column-by-column deserialization in Go with pgx version 4; but pgx version 5 has taken it to the next level: give pgx your struct and a pgx mapping function, and the deserialization is figured out for you.

As an added bonus, all language ecosystems, static and dynamic, have a lot of accumulated knowledge about what makes RDBMSs easier to interact with, and this keeps getting pushed down into the most basic SQL libraries we interact with. pgx's user-friendly handling of connection pooling and error handling is not unique to Go; but it is a reflection of many years of experience of making database interactions more and more frictionless.

From time to time, it's good to re-assess how we do even the most basic things. When it comes to interacting with Postgres, it may be time to ask yourself: are you doing it in the most streamlined way possible?