One of the first tasks in adopting a new database platform is copying legacy data to that new platform.

EDB Postgres AI Hybrid Manager has tooling (Data Migration Service, aka DMS) that will make this process easier by handling a lot of the complexity for you.

While simple migration scenarios lend themselves well to Postgres's bundled tools that we know and love (e.g., Postgres-to-Postgres migration using pg_dump and pg_restore), more complicated scenarios can be quite a challenge.

Imagine migrating from a legacy Oracle database to a Postgres cluster in Hybrid Manager.

Imagine migrating from one version of Postgres to a newer version of Postgres, but wanting the newer Postgres to be a continuously updated copy of the older Postgres.

Now imagine wanting your legacy Oracle database's data to be copied to a Hybrid Manager hosted Postgres instance, and continuing to stream live changes from that legacy Oracle database into your new Postgres instance.

There are certainly tools out there that allow this. Debezium, for instance. All one has to do is first install Kafka, then start Zookeeper... wait, this is already starting to require some serious infrastructure and some effort!

Hybrid Manager's tooling takes care of a lot of this for us.

Let's imagine a scenario where I have a legacy on-prem Postgres 16 database. I want to copy my existing data to Postgres 17 in HCP, and I want all subsequent data changes to stream from my on-prem Postgres 16 to my HCP-hosted Postgres 17.

As an experiment, I ran Postgres 16 on my laptop to mimic an on-prem legacy database, and I created a Postgres 17 cluster in HCP, to mimic my destination database. (It would have been fun to try an on-prem Oracle database, but I don't have an Oracle license handy, so an older version of Postgres will have to do.)

I used pg_dump to dump just the table definitions (no data!) of my legacy schema, and I used psql to load the empty schema into my Postgres 17 database in Hybrid Manager. (I should note that EDB has software that can port legacy Oracle schemas to Postgres schemas, but seeing as I was just going Postgres-to-Postgres, I took the easy route here.)
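If you want to script the schema-only copy, here is a minimal sketch in Python that wraps pg_dump and psql. The connection strings, schema name, and file name are placeholders rather than values from my setup, and the --no-owner and --no-privileges flags are my own assumption (the roles on the destination usually differ from the ones on the source).

```python
import subprocess


def schema_dump_cmd(source_dsn, schema, out_file):
    """Build a pg_dump command that writes table definitions only (no rows)."""
    return [
        "pg_dump",
        "--schema-only",       # DDL only, no data
        "--no-owner",          # assumption: skip source role ownership
        "--no-privileges",     # assumption: skip source GRANT/REVOKE statements
        f"--schema={schema}",
        f"--file={out_file}",
        f"--dbname={source_dsn}",
    ]


def schema_load_cmd(dest_dsn, in_file):
    """Build a psql command that replays the DDL file and stops on the first error."""
    return [
        "psql",
        "--set=ON_ERROR_STOP=1",
        f"--dbname={dest_dsn}",
        f"--file={in_file}",
    ]


def copy_schema(source_dsn, dest_dsn, schema, ddl_file="schema.sql"):
    """Dump the empty schema from the source and load it into the destination."""
    subprocess.run(schema_dump_cmd(source_dsn, schema, ddl_file), check=True)
    subprocess.run(schema_load_cmd(dest_dsn, ddl_file), check=True)
```

Calling copy_schema with your two connection strings runs both steps; the two builder functions are kept separate so you can print and inspect the commands before running anything.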

The next thing I did was download EDB's Change Data Capture Reader (cdcreader). The cdcreader is a nifty piece of software that will do what its name says: capture any changes to your data and report those changes to HCP. HCP, in turn, uses a Debezium/Kafka/Zookeeper stack (that you don't have to set up!) to stream data into your new destination database.
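To get a feel for what "capturing changes" means, here is a toy sketch of replaying Debezium-style change events against an in-memory copy of a table. The op codes (c, u, d, r) and the before/after fields follow Debezium's event envelope; everything else, including the apply_change function and the id key column, is hypothetical and is not how HCP applies changes internally.

```python
def apply_change(replica, event, key="id"):
    """Apply one Debezium-style change event to a dict keyed by primary key."""
    op = event["op"]
    if op in ("c", "r"):
        # c = row created, r = row read during the initial snapshot
        row = event["after"]
        replica[row[key]] = row
    elif op == "u":
        # u = row updated; drop the old key first in case the key itself changed
        before = event.get("before")
        if before is not None:
            replica.pop(before[key], None)
        row = event["after"]
        replica[row[key]] = row
    elif op == "d":
        # d = row deleted; only the "before" image is present
        replica.pop(event["before"][key], None)
    else:
        raise ValueError(f"unsupported op: {op!r}")
    return replica


events = [
    {"op": "r", "before": None, "after": {"id": 1, "name": "ada"}},
    {"op": "c", "before": None, "after": {"id": 2, "name": "bob"}},
    {"op": "u", "before": {"id": 2, "name": "bob"},
     "after": {"id": 2, "name": "robert"}},
    {"op": "d", "before": {"id": 1, "name": "ada"}, "after": None},
]

replica = {}
for event in events:
    apply_change(replica, event)
```

After the four events, the replica holds only the updated row with id 2, which is the same end state the source table would have.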

I had to follow the setup instructions to ensure my legacy Postgres would play nicely with the cdcreader. It was pretty straightforward stuff: ensure I had logical replication enabled in my Postgres, create a Debezium user and a migration role, then grant access to the tables I wanted to migrate.
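The preparation steps boil down to a handful of SQL statements. The sketch below generates them so they can be reviewed before being run through psql. The role names and table list are placeholders, and the exact statements are my assumption of a typical logical-replication setup; EDB's documented steps may differ in the details.

```python
def quote_ident(name):
    """Quote an SQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def source_prep_sql(cdc_user, migration_role, tables):
    """Return SQL to prepare a source database for change data capture.

    tables is a list of (schema, table) tuples to expose to the migration.
    """
    user = quote_ident(cdc_user)
    role = quote_ident(migration_role)
    statements = [
        # Logical decoding needs wal_level = logical (takes effect after a restart).
        "ALTER SYSTEM SET wal_level = 'logical';",
        f"CREATE ROLE {role};",
        # No password here on purpose; set one separately, e.g. with \password in psql.
        f"CREATE ROLE {user} WITH LOGIN REPLICATION;",
        f"GRANT {role} TO {user};",
    ]
    for schema in sorted({schema for schema, _ in tables}):
        statements.append(f"GRANT USAGE ON SCHEMA {quote_ident(schema)} TO {role};")
    for schema, table in tables:
        statements.append(
            f"GRANT SELECT ON {quote_ident(schema)}.{quote_ident(table)} TO {role};"
        )
    return statements


if __name__ == "__main__":
    for statement in source_prep_sql("debezium", "cdc_migration", [("public", "orders")]):
        print(statement)
```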

One interesting gotcha is that my legacy Postgres needed to run with SSL enabled. I still custom-compile my Postgres installations (a habit I formed back in the day), and I had to re-compile with SSL enabled, and then self-sign some certs.
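A quick way to confirm that a rebuilt server really offers SSL is to send the Postgres wire protocol's SSLRequest message and look at the one-byte reply (S for yes, N for no). The sketch below does that with only the Python standard library; the host and port are placeholders, and the function name is my own.

```python
import socket
import struct

# SSLRequest: a 4-byte length (8) followed by the 4-byte request code 80877103.
SSL_REQUEST = struct.pack("!II", 8, 80877103)


def server_accepts_ssl(host, port=5432, timeout=5.0):
    """Return True if the Postgres server at host:port is willing to negotiate SSL."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(SSL_REQUEST)
        return sock.recv(1) == b"S"


if __name__ == "__main__":
    print(server_accepts_ssl("localhost"))
```

This only checks that the server is willing to start an SSL handshake. It says nothing about whether the certificate is one that the client will trust.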

There were still some steps left: cdcreader is a Java application, so I needed to be sure I had Java installed (which I already did).

I needed to download some migration creds from HCP so that my cdcreader instance could communicate securely with my HCP stack.

I needed to modify the cdcreader wrapper script to use those creds, and to point at my legacy database.
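Before launching the reader, it can save a failed start to check that the three prerequisites above are in place. This is a small, hypothetical pre-flight helper: the credentials directory and the function itself are my own invention, not part of the cdcreader distribution, so adjust the paths to wherever you unpacked things.

```python
import os
import shutil


def preflight(creds_dir, wrapper_script, java_cmd="java"):
    """Return a list of human-readable problems; an empty list means ready to run."""
    problems = []
    if shutil.which(java_cmd) is None:
        problems.append(f"{java_cmd} not found on PATH")
    if not os.path.isdir(creds_dir) or not os.listdir(creds_dir):
        problems.append(f"no migration credentials in {creds_dir}")
    if not os.path.isfile(wrapper_script):
        problems.append(f"wrapper script {wrapper_script} is missing")
    elif not os.access(wrapper_script, os.X_OK):
        problems.append(f"wrapper script {wrapper_script} is not executable")
    return problems


if __name__ == "__main__":
    # Hypothetical paths, for illustration only.
    for problem in preflight("./creds", "./run-cdcreader.sh"):
        print("problem:", problem)
```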

Despite these steps, it was still much, much easier than finding a place to host and set up Kafka and learning the intricacies of Debezium! For roughly 30 minutes of work, I got all of this functionality.

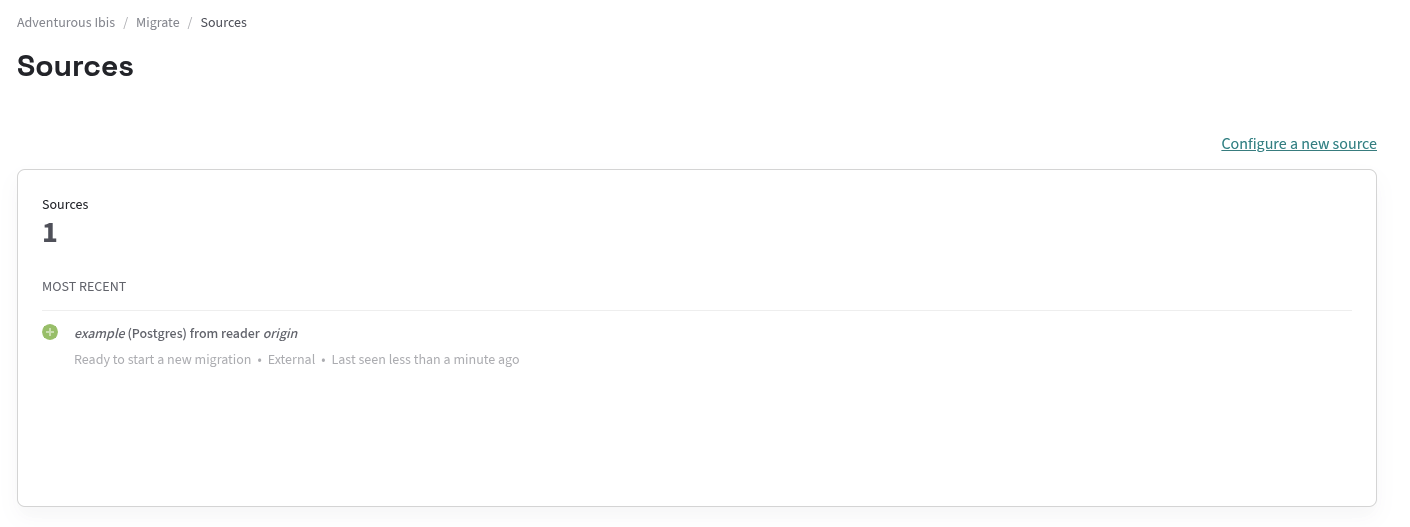

Next, I ran ./run-cdcreader.sh. I went to my HCP console, navigated to my Migration Sources, and saw that I had a migration source!

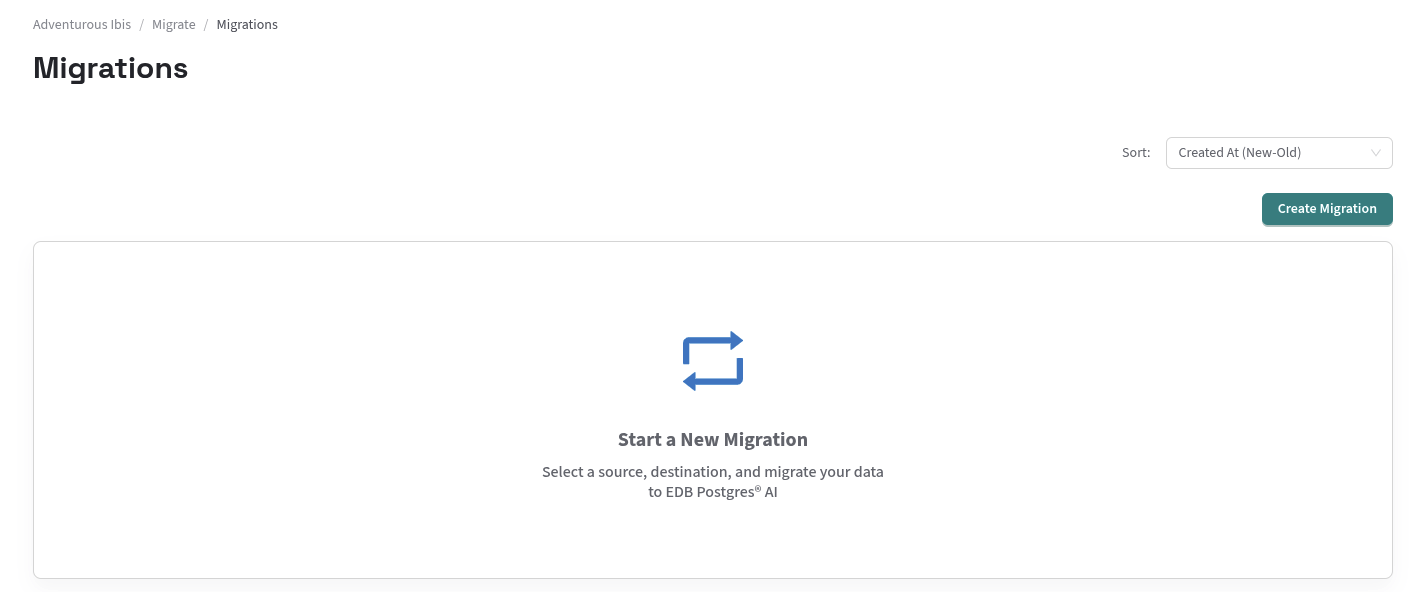

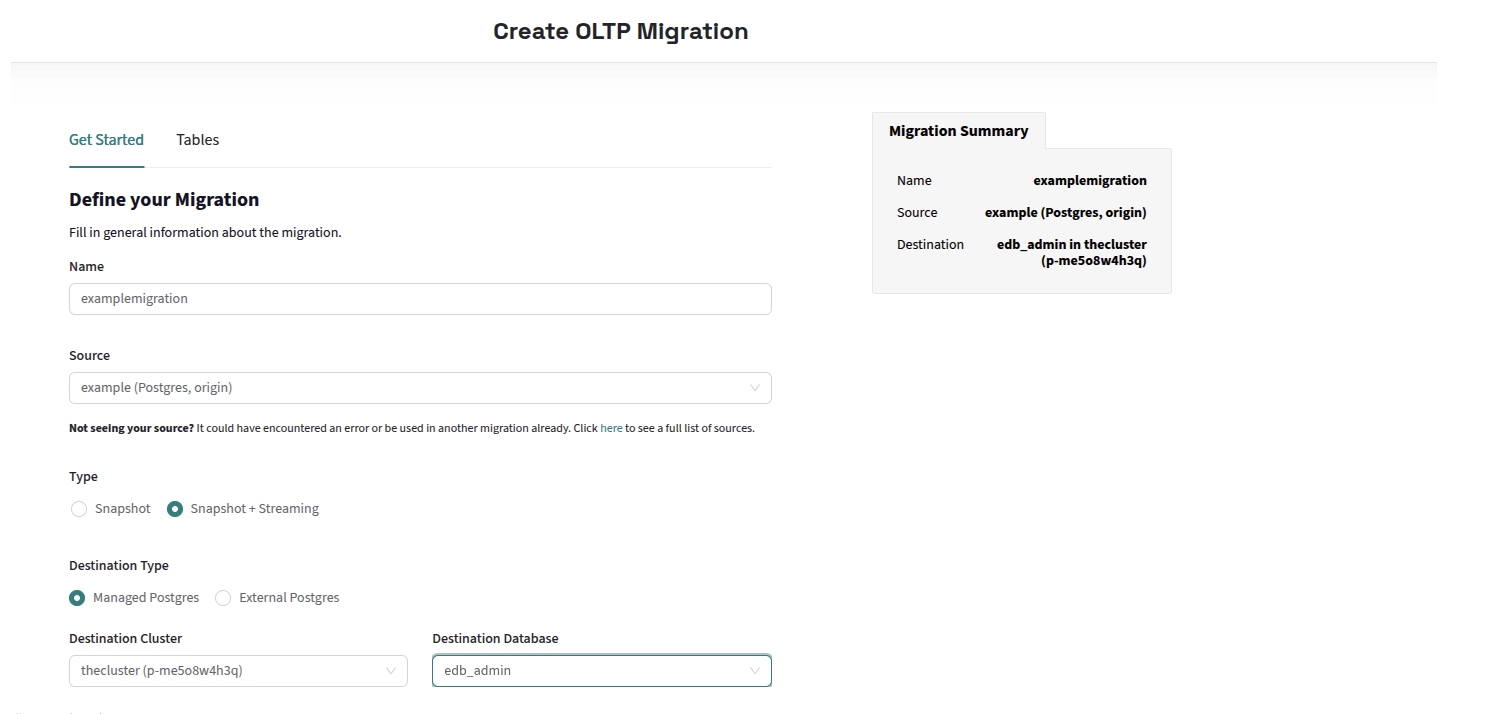

The rest was easy: from the comfort of the HCP console, I set up a new migration from my Migration Source to my Postgres 17 instance.

Start a new migration

Create migration

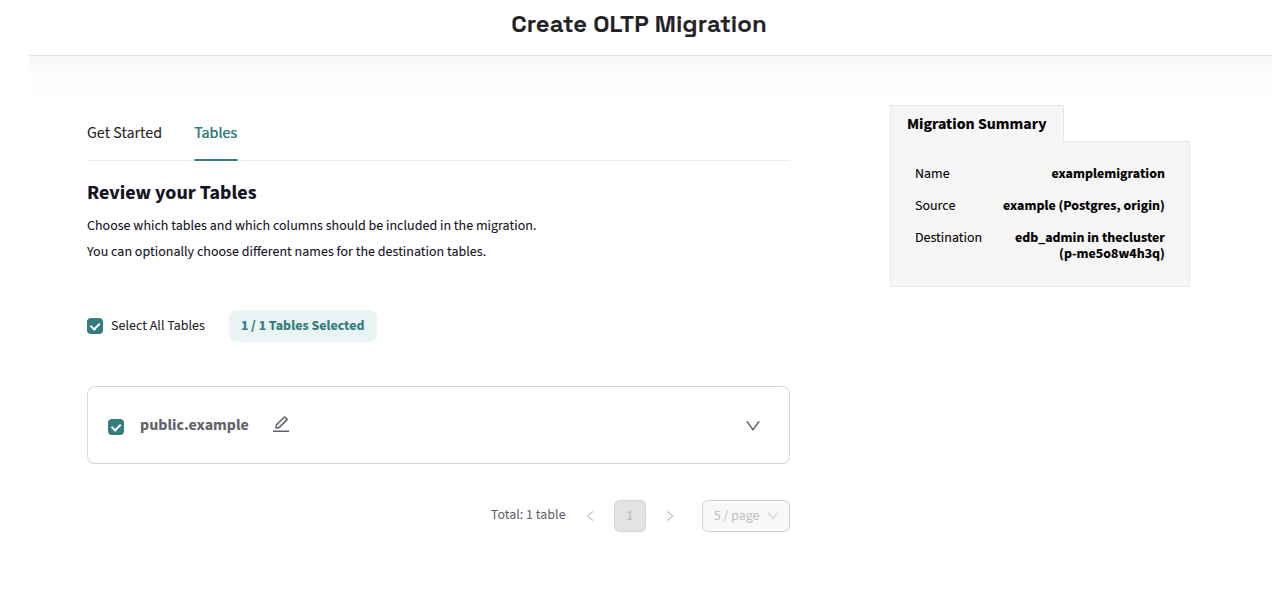

Usefully, I got to select which tables from my source I wanted to migrate. (My test schema had only one table, so you can guess which choice I made!)

Review your tables

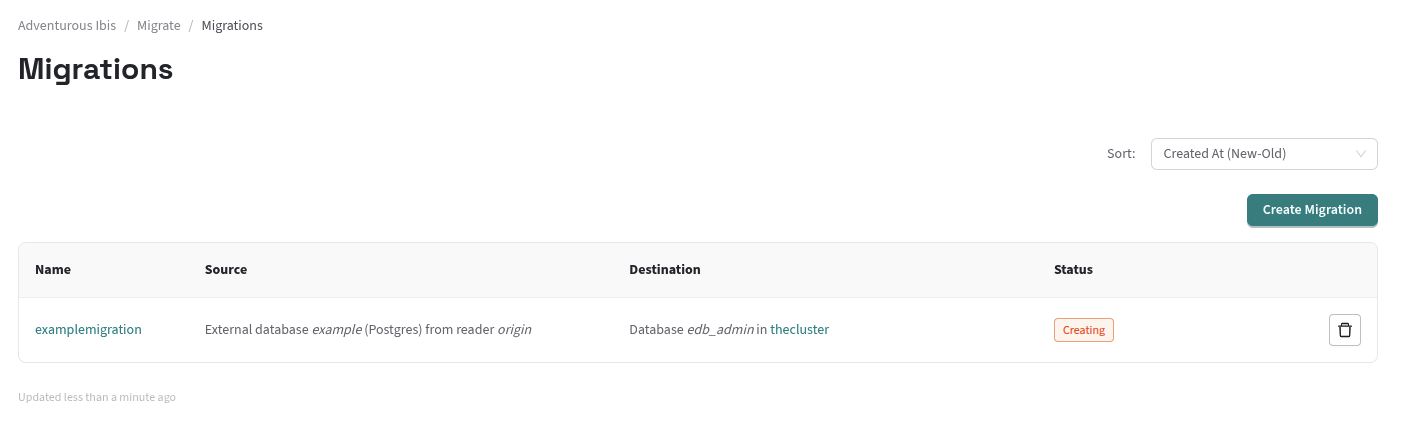

Creating Migrations on Migrations page

Because I had such a small amount of data for this experiment, the replica caught up immediately, and I got down to having some fun: I opened psql sessions in both my legacy and my HCP Postgres instances, and started making changes in my source data, adding rows, deleting rows, etc. I then ran SELECT statements to see that my destination data were being changed to keep up with my legacy data. Neat stuff!
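The eyeball check with SELECT statements can also be automated. Here is a minimal sketch that polls both databases until a table's rows match. It works with any Python DB-API connection (for Postgres, a driver such as psycopg would supply these; that choice is an assumption, not something I used above). It pulls whole tables into memory, so it only suits small test tables like mine.

```python
import re
import time

# Table names cannot be passed as query parameters, so validate them instead.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)?$")


def fetch_rows(conn, table):
    """Return every row of table in a stable order, for comparison."""
    if not _IDENT.match(table):
        raise ValueError(f"unexpected table name: {table!r}")
    cur = conn.cursor()
    try:
        cur.execute(f"SELECT * FROM {table}")
        # Sort by repr so rows containing NULLs can still be ordered.
        return sorted(cur.fetchall(), key=repr)
    finally:
        cur.close()


def wait_for_sync(src_conn, dst_conn, table, timeout=30.0, interval=1.0):
    """Poll until source and destination hold the same rows, or time out."""
    deadline = time.monotonic() + timeout
    while True:
        if fetch_rows(src_conn, table) == fetch_rows(dst_conn, table):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

After inserting or deleting a row on the legacy side, calling wait_for_sync with the two connections and the table name returns True once the change has landed in the destination.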

One thing I have found is that once the cdcreader is set up, pointed at my legacy database, and showing up in the HCP web UI as a Migration Source, the hard part is over: it is fairly trivial to delete migrations, start new ones, or even delete entire Postgres clusters, re-load my dataless legacy schema, and then start a new migration. Experimentation becomes easy.

This ability to experiment makes me feel more confident when I consider real-world switchover plans for production databases. Particularly for small-ish databases behind microservices, where the data transfers quickly, the ability to try different scenarios is very freeing. Copies of your microservices could be spun up, pointed at the new database, and have their behavior validated to increase confidence about the switchover. Different scenarios could be tried before committing to any one switchover strategy.

Meanwhile, your legacy database keeps chugging along, having its data changes captured and streamed to your HCP Postgres databases.