In Part 1, we explored the fundamentals of building a simple AI agent and understanding its core logic. Part 2 revealed the performance bottlenecks and scaling issues that arise as agents become more complex and capable.

This article (Part 3) focuses on a practical strategy for optimizing agent development. We'll show how to move beyond the limitations of monolithic agents by applying a modular, multi-agent design. The goal is to help you build agent systems that are robust and maintainable.

The Problem: Performance Challenges of a Monolithic Agent with Increasing Tools

Let's quickly recap the problem from Part 2. Our single, monolithic agent had to know everything. With every query, it loaded the context for every tool it had. This led to three major issues:

- Token Explosion: Prompts became bloated with irrelevant information, driving up costs.

- "Choice Paralysis": The agent struggled to pick the right tool from a long list, leading to errors.

- Slow Response Times: Bigger prompts meant more processing time for the AI model.

Simply put, the "one agent to rule them all" approach doesn't scale. It's time for a better way.

The Solution: From One Clunky Agent to a Team of Specialists

The fix is a concept you already know from the real world: specialization. Instead of one generalist who knows a little about everything, we'll build a team of specialists, each an expert in its own domain.

Here's the new setup:

- Specialist Agents: We'll have an AccountAgent that only handles user info and an OrderAgent that only deals with orders. Each has its own small, focused set of tools.

- Coordinator Agent: A new "manager" agent will sit on top. Its only job is to look at an incoming request and route it to the correct specialist.

This is our modular, multi-agent architecture. The coordinator agent acts like a smart dispatcher, and the specialists do the heavy lifting in their respective areas.

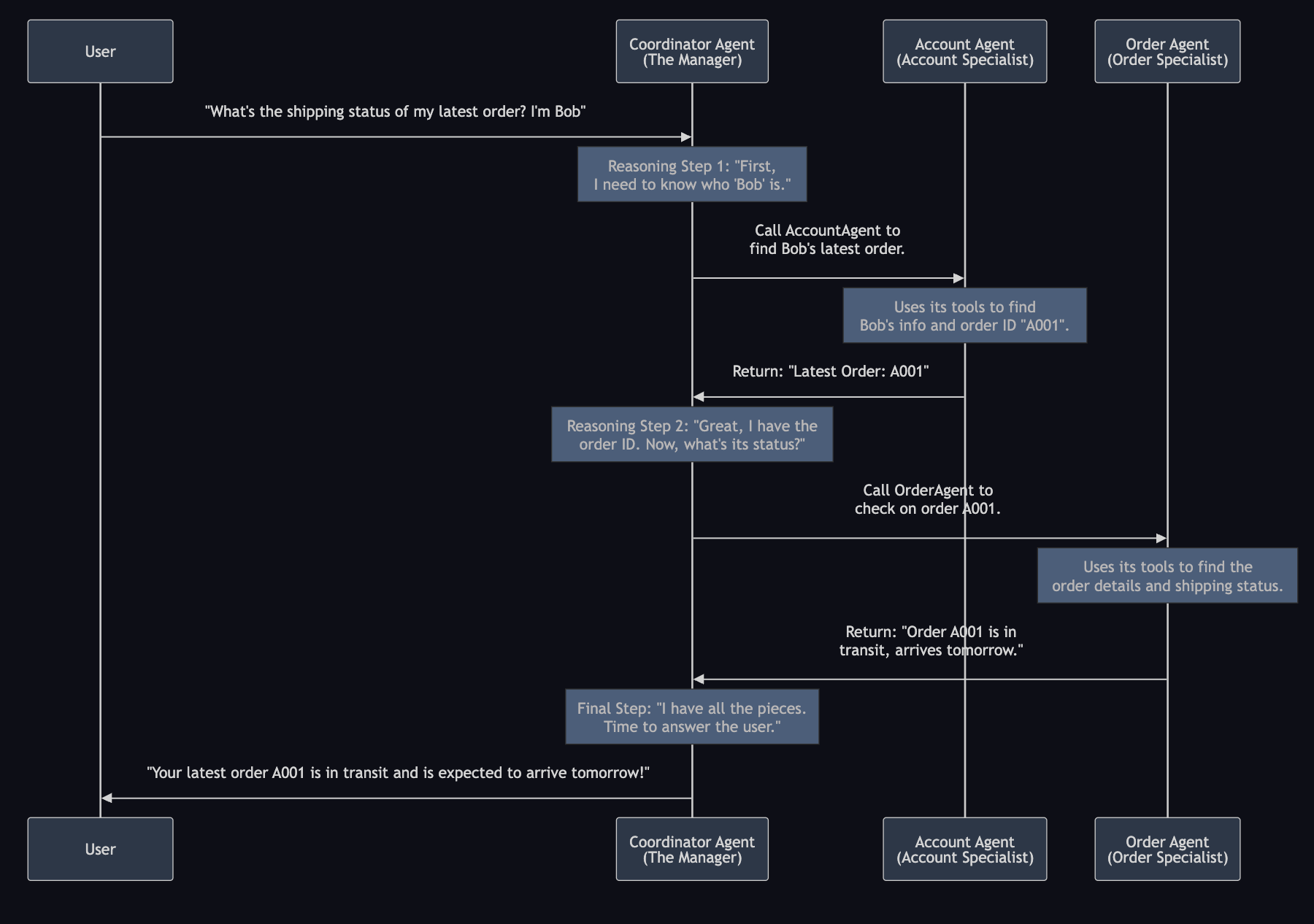
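To make the division of labor concrete, here is a framework-free sketch of the team's structure. It is illustrative only: the keyword-based route function is a hypothetical stand-in for the decision the coordinator's LLM makes, and the specialist and tool names mirror the ones used later in this article.

```python
# Each specialist owns a small, focused toolset (tool names from this article).
SPECIALISTS: dict[str, list[str]] = {
    "AccountAgent": ["get_user_info", "get_user_orders"],
    "OrderAgent": ["get_order_details", "check_shipping_status"],
}

# The coordinator's only "tools" are the specialists themselves.
COORDINATOR_TOOLS: list[str] = list(SPECIALISTS)

# Hypothetical keyword hints standing in for the coordinator LLM's judgment.
ROUTING_HINTS: dict[str, tuple[str, ...]] = {
    "AccountAgent": ("account", "profile", "user", "subscription"),
    "OrderAgent": ("order", "shipping", "delivery", "tracking"),
}


def route(request: str) -> list[str]:
    """Return the specialists whose domain the request touches, in a fixed order."""
    text = request.lower()
    return [
        name
        for name in COORDINATOR_TOOLS
        if any(hint in text for hint in ROUTING_HINTS[name])
    ]


print(route("What's my subscription tier?"))      # ['AccountAgent']
print(route("Where is my order being shipped?"))  # ['OrderAgent']
```

A keyword router like this cannot work out that an order ID must first be looked up through the account specialist before the order specialist can help; that kind of multi-step sequencing is what the LLM-driven coordinator adds.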

The Blueprint: How It Works in Practice

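Let's walk through the workflow for the query: "What's the shipping status of my latest order? I'm Bob."

The coordinator first asks the AccountAgent for Bob's recent orders, which yields the order ID. It then passes that ID to the OrderAgent, which looks up the order details and shipping status. Finally, the coordinator combines the results into a single answer.

This looks more complex, but it's actually more efficient: the coordinator agent makes simple, high-level decisions, and the specialists make simple, low-level decisions. No single agent is ever overwhelmed.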
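The hand-off can be sketched in plain Python. This is a hypothetical mock, not the LangGraph implementation: the two specialist functions return canned answers modeled on the trace later in this article, and the coordinator's two hops are hard-coded to show the sequence the LLM works out on its own.

```python
import re

# Hypothetical canned data, modeled on the trace shown later in this article.
RECENT_ORDERS = {"Bob": ["A001"]}
SHIPPING = {"A001": "In transit, expected delivery tomorrow"}


def account_specialist(user_id: str) -> str:
    """Stand-in for AccountAgent: reports a user's recent orders as free text."""
    orders = RECENT_ORDERS.get(user_id, [])
    return f"User {user_id} recent orders: " + ", ".join(f"Order #{o}" for o in orders)


def order_specialist(order_id: str) -> str:
    """Stand-in for OrderAgent: reports the shipping status of one order."""
    return f"Order {order_id}: {SHIPPING.get(order_id, 'unknown order')}"


def coordinator(user_id: str) -> str:
    # Hop 1: ask the account specialist which orders belong to the user.
    account_reply = account_specialist(user_id)
    order_ids = re.findall(r"#(\w+)", account_reply)
    if not order_ids:
        return f"No orders found for {user_id}."
    # Hop 2: pass the latest order ID (assuming oldest-to-newest order) onward.
    return order_specialist(order_ids[-1])


print(coordinator("Bob"))  # Order A001: In transit, expected delivery tomorrow
```

In the real system the coordinator's LLM reads the account specialist's reply and extracts the order ID itself; the regular expression here only mimics that step.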

Let's Build It: A Step-by-Step Guide

Step 1: Build the Specialist Agents

First, we create our two specialists: account_agent and order_agent. Each one is a standard ReAct agent, but with a very limited set of tools.

```python
import os

from langchain_openai import ChatOpenAI
from langgraph.prebuilt import create_react_agent

# get_user_info, get_user_orders, get_order_details and check_shipping_status
# are assumed to be the four tool functions defined in Part 2.

model = ChatOpenAI(
    model=os.getenv("MODEL_NAME", "openai/gpt-4o"),
    api_key=os.getenv("OPENAI_API_KEY"),
    base_url=os.getenv("MODEL_BASE_URL"),
)

# The Account specialist, focused only on user-related tools
account_agent = create_react_agent(
    model=model,
    tools=[get_user_info, get_user_orders],
    prompt="You are a customer service assistant. Help users with account inquiries.",
)

# The Order specialist, focused only on order-related tools
order_agent = create_react_agent(
    model=model,
    tools=[get_order_details, check_shipping_status],
    prompt="You are a customer service assistant. Help users with order inquiries.",
)
```
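The four tool functions come from Part 2 and are not repeated here. If you want to run this part on its own, hypothetical stand-ins like the following are enough: they return canned strings modeled on the traces below. In a LangChain project you would typically decorate each one with @tool from langchain_core.tools, which uses the function's name and docstring as the tool's name and description; the decorator is omitted here so the sketch runs with the standard library alone.

```python
# Hypothetical in-memory "database", modeled on the traces in this article.
USERS = {"Bob": {"status": "Active", "tier": "Premium", "orders": ["A001"]}}
ORDERS = {
    "A001": {
        "amount": 299.99,
        "destination": "New York",
        "status": "In transit, expected delivery tomorrow",
    }
}


def get_user_info(user_id: str) -> str:
    """Look up a user's account status and subscription tier."""
    user = USERS.get(user_id)
    if user is None:
        return f"User {user_id} not found"
    return f"User {user_id}: {user['status']} account, {user['tier']} tier"


def get_user_orders(user_id: str) -> str:
    """List a user's recent order IDs."""
    user = USERS.get(user_id)
    if user is None:
        return f"User {user_id} not found"
    return f"User {user_id} recent orders: " + ", ".join(f"Order #{o}" for o in user["orders"])


def get_order_details(order_id: str) -> str:
    """Return the amount and destination of an order."""
    order = ORDERS.get(order_id)
    if order is None:
        return f"Order {order_id} not found"
    return f"Order {order_id}: ${order['amount']:.2f}, shipped to {order['destination']}"


def check_shipping_status(order_id: str, destination: str) -> str:
    """Return the shipping status of an order bound for the given destination."""
    order = ORDERS.get(order_id)
    if order is None or order["destination"] != destination:
        return f"No shipment found for order {order_id} to {destination}"
    return f"Order {order_id}: {order['status']}"
```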

Step 2: Wrap Agents as Tools for Coordination

Now the coordinator agent needs a way to call these agents. We do this by "wrapping" each specialist in a BaseTool subclass, which makes account_agent and order_agent look like regular tools that the coordinator agent can use. Routing through tools keeps the system modular and extensible: adding a specialist means adding one more tool.

```python
from langchain_core.tools import BaseTool


class AccountTool(BaseTool):
    """Tool wrapping account_agent"""

    name: str = "AccountTool"
    description: str = """
    Handles user account queries: retrieves user information and recent orders.
    Parameters:
    - query: String containing the user's request; must include the user ID.
    Returns:
    A string containing the user's information or recent orders with order_id.
    """

    def __init__(self, account_agent):
        super().__init__()
        # Underscore attributes are not treated as Pydantic fields
        self._agent = account_agent

    def _run(self, query: str) -> str:
        """Execute user management operations."""
        try:
            # Forward the query to the specialist as a fresh conversation
            result = self._agent.invoke({"messages": [("user", query)]})
            # The specialist's final answer is its last message
            return result["messages"][-1].content
        except Exception as e:
            return f"Account agent error: {e}"


class OrderTool(BaseTool):
    """Tool wrapping order_agent"""

    name: str = "OrderTool"
    description: str = """
    Handles order queries: retrieves order details and shipping status.
    Parameters:
    - query: String containing the order query; must include the order ID and destination.
    Returns:
    A string containing the order's details or shipping status.
    """

    def __init__(self, order_agent):
        super().__init__()
        self._agent = order_agent

    def _run(self, query: str) -> str:
        """Execute order management operations."""
        try:
            result = self._agent.invoke({"messages": [("user", query)]})
            return result["messages"][-1].content
        except Exception as e:
            return f"Order agent error: {e}"
```
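AccountTool and OrderTool differ only in their name, description, and error prefix, so one design alternative is a single generic wrapper. The sketch below is framework-free and hypothetical (AgentAsTool is not a LangChain class): it assumes only that the wrapped agent exposes invoke() accepting {"messages": [...]} and returning a dict whose last message has a .content attribute, the same contract the two classes above rely on. Fake agents stand in for real ones so the example runs anywhere.

```python
from dataclasses import dataclass
from types import SimpleNamespace
from typing import Any, Protocol


class InvokableAgent(Protocol):
    def invoke(self, state: dict[str, Any]) -> dict[str, Any]: ...


@dataclass
class AgentAsTool:
    """Hypothetical generic wrapper: exposes any invokable agent as a named tool."""

    name: str
    description: str
    agent: InvokableAgent

    def run(self, query: str) -> str:
        try:
            result = self.agent.invoke({"messages": [("user", query)]})
            # The specialist's final answer is the last message in its state.
            return result["messages"][-1].content
        except Exception as e:  # report failures to the coordinator as text
            return f"{self.name} error: {e}"


class EchoAgent:
    """Fake agent for demonstration: echoes the user's query back."""

    def invoke(self, state: dict[str, Any]) -> dict[str, Any]:
        _, text = state["messages"][-1]
        return {"messages": [SimpleNamespace(content=f"echo: {text}")]}


class BrokenAgent:
    """Fake agent that always fails, to exercise the error path."""

    def invoke(self, state: dict[str, Any]) -> dict[str, Any]:
        raise RuntimeError("backend unavailable")


echo_tool = AgentAsTool("AccountTool", "Handles account queries.", EchoAgent())
print(echo_tool.run("User ID: Bob"))  # echo: User ID: Bob
```

The same idea could likely be folded back into one BaseTool subclass that receives its name and description as arguments, so each new specialist needs only a new instance rather than a new class.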

Step 3: Coordinator Agent for Smart Routing

The coordinator agent acts as the "dispatcher," analyzing user requests and routing them to the appropriate specialist agent(s). This pattern keeps the system organized and enables easy scaling as new domains are added.

```python
coordinator_agent = create_react_agent(
    model=model,
    tools=[AccountTool(account_agent), OrderTool(order_agent)],
    prompt="You are a customer service assistant. Help users with account and order inquiries.",
)
```

And that's it! We've built a multi-agent system.
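Under the hood, a ReAct-style agent repeats a simple loop: the model either requests a tool call or gives a final answer, and each tool result is appended to the message history before the next model call. The sketch below illustrates that loop with a scripted fake model. It is a simplified illustration, not LangGraph's actual implementation; react_loop, scripted_model, and the message dictionaries are hypothetical. Because every model call resends the whole history, prompt sizes grow from call to call, which matches the coordinator's rising prompt token counts in the run below (173, 239, then 323).

```python
from typing import Callable

Message = dict[str, str]
Model = Callable[[list[Message]], Message]


def react_loop(model: Model, tools: dict[str, Callable[[str], str]],
               question: str, max_steps: int = 5) -> str:
    """Minimal ReAct-style loop: call the model, run requested tools, repeat."""
    history: list[Message] = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = model(history)  # the model sees the entire history each time
        history.append(reply)
        if "tool" not in reply:  # no tool requested: this is the final answer
            return reply["content"]
        result = tools[reply["tool"]](reply["input"])
        history.append({"role": "tool", "content": result})
    return "Stopped: step limit reached"


def scripted_model(history: list[Message]) -> Message:
    """Fake coordinator that replays the routing decisions from this article's run."""
    tool_results = [m["content"] for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"role": "ai", "content": "", "tool": "AccountTool", "input": "User ID: Bob"}
    if len(tool_results) == 1:
        return {"role": "ai", "content": "", "tool": "OrderTool", "input": "Order ID: A001"}
    return {"role": "ai", "content": f"Final answer: {tool_results[-1]}"}


tools = {
    "AccountTool": lambda q: "Recent Orders: Order #A001",
    "OrderTool": lambda q: "Order A001 is in transit",
}
print(react_loop(scripted_model, tools, "What's the shipping status of my latest order? I'm Bob"))
# Final answer: Order A001 is in transit
```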

Putting Our Multi-Agent System to the Test

Time for the moment of truth. Let's run the exact same query that caused our monolithic agent to struggle in Part 2. Will our new architecture deliver on its promises?

```python
result = coordinator_agent.invoke({
    "messages": [("user", "What's the shipping status of my latest order? I'm Bob")]
})

print(result["messages"][-1].content)
```

Let's trace through what happens step by step. First, here's the coordinator agent in action. Notice how it makes intelligent routing decisions:

```
{
    "message": [
        HumanMessage(content="What's the shipping status of my order shipped to New York? I'm user Bob"),
        AIMessage(
            content="",
            response_metadata={
                "token_usage": {
                    "completion_tokens": 17,
                    "prompt_tokens": 173,
                    "total_tokens": 190,
                }
            },
            "tool_calls": [
                {
                    "name": "AccountTool",
                    "arguments": '{"query":"User ID: Bob"}',
                }
            ],
        ),
        ToolMessage(
            content="I have retrieved the information for User Bob:\n\n- Account Status: Active\n- Subscription Tier: Premium\n- Recent Orders: Order #A001\n\nIf you need further assistance, feel free to ask!",
            response_metadata={},
        ),
        AIMessage(
            content="",
            response_metadata={
                "token_usage": {
                    "completion_tokens": 23,
                    "prompt_tokens": 239,
                    "total_tokens": 262,
                }
            },
            "tool_calls": [
                {
                    "name": "OrderTool",
                    "arguments": '{"query":"Order ID: A001, Destination: New York"}',
                }
            ],
        ),
        ToolMessage(
            content="Here are the details for your order A001:\n\n- **Order Details**: The order amount is $299.99, and it is being shipped to New York.\n- **Shipping Status**: The order is currently in transit, with an expected delivery tomorrow.",
            response_metadata={},
        ),
        AIMessage(
            content="Your order #A001 is currently in transit to New York and is expected to be delivered tomorrow. If you need more assistance, let me know!",
            response_metadata={
                "token_usage": {
                    "completion_tokens": 31,
                    "prompt_tokens": 323,
                    "total_tokens": 354,
                }
            },
        ),
    ]
}

Total time consumption: 9.49 seconds
```

Now let's peek behind the curtain to see how our specialist agents handled their respective tasks. Notice how each agent only focused on its domain expertise:

```
==========Account Tool Result==========
{
    "message": [
        HumanMessage(content="User ID: Bob"),
        AIMessage(
            content="",
            response_metadata={
                "token_usage": {
                    "completion_tokens": 16,
                    "prompt_tokens": 153,
                    "total_tokens": 169,
                }
            },
            "tool_calls": [
                {
                    "name": "UserInfoTool",
                    "arguments": '{"user_id":"Bob"}',
                }
            ],
        ),
        ToolMessage(
            content="User Bob: Acctive account, Premium tier",
        ),
        AIMessage(
            content="",
            response_metadata={
                "token_usage": {
                    "completion_tokens": 20,
                    "prompt_tokens": 186,
                    "total_tokens": 206,
                }
            },
            "tool_calls": [
                {
                    "name": "UserOrdersTool",
                    "arguments": '{"user_id":"Bob","tier":"Premium"}',
                }
            ],
        ),
        ToolMessage(
            content="User Bob recent orders: Order #A001",
        ),
        AIMessage(
            content="I have retrieved the information for User Bob:\n\n- Account Status: Active\n- Subscription Tier: Premium\n- Recent Orders: Order #A001\n\nIf you need further assistance, feel free to ask!",
            response_metadata={
                "token_usage": {
                    "completion_tokens": 42,
                    "prompt_tokens": 224,
                    "total_tokens": 266,
                }
            },
        ),
    ]
}
```

```
==========Order Tool Result==========
{
    "message": [
        HumanMessage(content="Get shipping status for Order #A001"),
        AIMessage(
            content="",
            response_metadata={
                "token_usage": {
                    "completion_tokens": 17,
                    "prompt_tokens": 160,
                    "total_tokens": 177,
                }
            },
            "tool_calls": [
                {
                    "name": "get_order_details",
                    "arguments": '{"order_id":"A001"}',
                }
            ],
        ),
        ToolMessage(
            content="Order A001: $299.99, shipped to New York",
        ),
        AIMessage(
            content="",
            response_metadata={
                "token_usage": {
                    "completion_tokens": 22,
                    "prompt_tokens": 199,
                    "total_tokens": 221,
                }
            },
            "tool_calls": [
                {
                    "name": "check_shipping_status",
                    "arguments": '{"order_id":"A001","destination":"New York"}',
                }
            ],
        ),
        ToolMessage(
            content="Order A001: In transit, expected delivery tomorrow",
        ),
        AIMessage(
            content="The shipping status for Order #A001 is currently \"In transit\" with an expected delivery date of tomorrow to New York.",
            response_metadata={
                "token_usage": {
                    "completion_tokens": 26,
                    "prompt_tokens": 240,
                    "total_tokens": 266,
                }
            },
        ),
    ]
}
```

Success! Our multi-agent system worked flawlessly. The coordinator agent intelligently routed the query to the right specialists, each agent stayed focused on its expertise, and we got a complete, accurate answer. But the real question is: did we actually solve the performance problems from Part 2?

Performance Showdown: Monolithic vs. Modular

Here’s a head-to-head comparison for the same query.

| Metric | Monolithic Agent (from Part 2) | Modular Agent | Difference |
|---|---|---|---|
| Total Tokens | ~2,759 tokens | ~2,111 tokens | ~23% reduction |
| Response Time | ~12.98 seconds | ~9.49 seconds | ~27% reduction |
| AI Model Calls | 5 calls | 9 calls | More, but smaller, calls |
| Reliability | Prone to errors | High | More predictable |
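The modular figures can be checked against the traces above: each of the three agents made three model calls, and summing their total_tokens values gives the table's total. The percentage reductions then follow directly. The monolithic numbers are taken as reported from Part 2.

```python
# Per-call total_tokens copied from the three traces above.
coordinator_calls = [190, 262, 354]
account_calls = [169, 206, 266]
order_calls = [177, 221, 266]

modular_tokens = sum(coordinator_calls + account_calls + order_calls)
modular_calls = len(coordinator_calls) + len(account_calls) + len(order_calls)

# Monolithic figures as reported in Part 2; modular time from the run above.
monolithic_tokens, monolithic_seconds = 2759, 12.98
modular_seconds = 9.49


def reduction(before: float, after: float) -> float:
    """Percentage reduction going from `before` to `after`."""
    return (before - after) / before * 100


print(modular_tokens, modular_calls)                                         # 2111 9
print(f"{reduction(monolithic_tokens, modular_tokens):.1f}% fewer tokens")   # 23.5% fewer tokens
print(f"{reduction(monolithic_seconds, modular_seconds):.1f}% less time")    # 26.9% less time
```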

What's Going On Here? The Magic of Isolation

Wait, the modular agent made more AI calls (9 vs. 5), so how did it end up faster and cheaper? The answer is context isolation.

1. Token Consumption Dropped Dramatically

- Monolithic Agent: The old agent was a token-guzzler. It had to load the context of all four tools on every single reasoning step. This is like trying to have a quiet conversation in a room where 20 people are shouting.

- Modular Agent: Our new architecture is smarter. While it makes more calls, each call is tiny and focused. The coordinator agent only knows about its two sub-agents. Each sub-agent only knows about its two tools. By isolating irrelevant context, the total token count drops by over 23%. That's real money saved on API calls.
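Dividing each total by its number of model calls shows what "each call is tiny" means in numbers, using only the figures from the comparison table:

```python
def tokens_per_call(tokens: int, calls: int) -> float:
    """Average tokens consumed per model call."""
    return tokens / calls


# Totals and call counts from the comparison table.
for label, tokens, calls in (("Monolithic", 2759, 5), ("Modular", 2111, 9)):
    print(f"{label}: {tokens_per_call(tokens, calls):.0f} tokens per call")
# Monolithic: 552 tokens per call
# Modular: 235 tokens per call
```

Each modular call averages less than half the tokens of a monolithic call, which more than offsets the four extra calls.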

2. It Got Smarter, More Reliable, and Faster

- Before: The monolithic agent suffered from "choice paralysis." Picking the right tool from a long, confusing list increased the odds of making a mistake.

- After: The coordinator agent makes a simple, high-level choice: "Is this about an account or an order?" Then the specialist makes another simple choice from its small toolbox. Narrowing each decision this way reduces the chance of picking the wrong tool and makes the system's behavior more predictable. The smaller prompts also let the AI model respond faster, contributing to lower overall latency.

The Real Payoff: Scaling for the Future

This is where the multi-agent approach truly shines. Imagine we need to add 10 more tools for handling payments, returns, and inventory.

- Monolithic Agent: Performance would degrade further. Every reasoning step would carry the context of all 14 tools, so prompt size and token costs would keep climbing, and picking the right tool from a longer list would become even more error-prone.

- Modular Agent: We'd simply create a PaymentAgent or ReturnsAgent and add it as a new tool for the coordinator agent. The existing AccountAgent and OrderAgent would be completely unaffected. The system scales beautifully because the complexity is contained within each specialist.
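One way to see why the modular design scales is to count how many options each decision involves. The sketch below is hypothetical: the ten new tool names and their split across payments, returns, and inventory are invented for illustration. It compares the single toolbox a monolithic agent would face with the largest menu any one agent faces in the modular design.

```python
# Existing specialists and their tools (from this article).
specialists: dict[str, list[str]] = {
    "AccountAgent": ["get_user_info", "get_user_orders"],
    "OrderAgent": ["get_order_details", "check_shipping_status"],
}

# Hypothetical new specialists covering ten additional tools.
new_specialists: dict[str, list[str]] = {
    "PaymentAgent": ["charge_card", "refund_payment", "list_invoices", "update_billing"],
    "ReturnsAgent": ["start_return", "check_return_status", "print_return_label"],
    "InventoryAgent": ["check_stock", "reserve_item", "list_warehouses"],
}


def decision_sizes(team: dict[str, list[str]]) -> tuple[int, int]:
    """Return (monolithic toolbox size, largest single menu in the modular design)."""
    monolithic = sum(len(tools) for tools in team.values())
    coordinator = len(team)  # the coordinator chooses among specialists
    biggest_specialist = max(len(tools) for tools in team.values())
    return monolithic, max(coordinator, biggest_specialist)


# Growing the team only adds entries; existing specialists are left untouched.
grown = {**specialists, **new_specialists}
print(decision_sizes(specialists))  # (4, 2)
print(decision_sizes(grown))        # (14, 5)
```

The coordinator's menu does still grow by one entry per new specialist, which is the cost this design accepts in exchange for keeping every specialist's toolbox small.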

The bottom line is this: Breaking a complex problem into smaller, manageable pieces isn't just good practice—it's the key to building AI agents that are efficient, reliable, and ready for the real world.

Conclusion: The Journey to Optimized AI Agents

Throughout this series, we've journeyed from building a basic agent to confronting its limitations and finally architecting a sophisticated, scalable solution. The multi-agent, modular design we implemented in Part 3 is a powerful pattern for building robust AI systems.

By splitting a monolithic agent into specialized sub-agents, we gain several key advantages:

- Improved Performance & Lower Cost: Isolating tools within specialist agents drastically reduces the context for each AI call, leading to lower token consumption and faster response times.

- Enhanced Reliability: Agents are less prone to "choice paralysis" when selecting from a small, relevant set of tools, leading to more accurate and predictable behavior.

- Scalability and Maintainability: New capabilities can be added by creating new agents without disrupting existing ones. This makes the entire system easier to scale, debug, and maintain over time.

However, this approach is not a one-size-fits-all solution. A multi-agent architecture pays off most when you are dealing with a large and growing number of tools. If your agent only needs a handful of tools, the complexity of setting up a coordinator agent and multiple sub-agents may not provide a significant return on investment. In such cases, a simpler, monolithic agent can be more than sufficient.

It's also important to note that architectural changes are just one piece of the optimization puzzle. Other advanced methods for improving agent performance include:

- Tool Chaining: Creating predefined sequences of tool calls to make complex workflows more reliable.

- Structured Context: Providing information to the model in a clear, organized format to improve its situational awareness.

- Memory Management: Implementing sophisticated memory systems to reduce redundant tool calls and improve conversational continuity.
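Of these, the "reduce redundant tool calls" side of memory management is the easiest to illustrate briefly. A minimal sketch, assuming tool results are safe to reuse within a session (reasonable for read-only lookups, not for tools with side effects): memoize each tool call on its arguments so that a repeated request skips the backend. functools.lru_cache from the Python standard library does the bookkeeping; lookup_shipping_status is a hypothetical tool.

```python
from functools import lru_cache

backend_calls = 0  # counts how often the (hypothetical) backend is actually hit


@lru_cache(maxsize=256)
def lookup_shipping_status(order_id: str) -> str:
    """Hypothetical read-only tool; results are cached per order_id."""
    global backend_calls
    backend_calls += 1
    return f"Order {order_id}: In transit"


for _ in range(3):
    lookup_shipping_status("A001")  # only the first call reaches the backend
lookup_shipping_status("B002")

print(backend_calls)                             # 2
print(lookup_shipping_status.cache_info().hits)  # 2
```

Since a shipping status changes over time, a real system would also need an expiry policy; lookup_shipping_status.cache_clear() is the blunt version, resetting the whole cache.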

In this series, we focused on the sub-agent architecture to demonstrate its profound impact on scalability. Each of these other areas offers its own powerful advantages and warrants its own deep dive.

The key is to choose the right architecture for the job. For complex, real-world applications destined to grow, a multi-agent system provides a solid foundation for success.